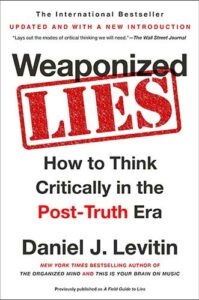

Daniel J. Levitin (@danlevitin) is a cognitive psychologist, neuroscientist, musician, record producer, and author of Weaponized Lies: How to Think Critically in the Post-Truth Era. [Note: This is a previously broadcast episode from the vault that we felt deserved a fresh pass through your earholes!]

What We Discuss with Daniel J. Levitin:

- The most important quality for anyone who wants to be an evidence-based, critical thinker.

- Why even society’s smartest people fall victim to misinformation.

- The first step to making good decisions.

- How to dismantle bad arguments on the fly.

- Why you need to look beyond averages when making a case.

- And much more…

Like this show? Please leave us a review here — even one sentence helps! Consider including your Twitter handle so we can thank you personally!

Our guest today is cognitive psychologist and neuroscientist Daniel Levitin, who explains that he wrote Weaponized Lies: How to Think Critically in the Post-Truth Era “to help everyone make better decisions and to think more effectively about the information they encounter in day-to-day life.”

Listen to this episode in its entirety to learn more about the pervasiveness of pseudo-expertise, why even educated people succumb to quackery, why it’s important to remember that correlation does not imply causation, why we shouldn’t feel dumb if we can’t wrap our heads around statistics (but how we can train ourselves to be better), where to find superb examples of misleading graphs in abundance, how a Mark Twain quote that ain’t so gets passed around among people who should know better (Daniel included), how Nicolas Cage movies correlate with people who drown in swimming pools, the importance of arguing against our own point of view, and lots more. Listen, learn, and enjoy!

Please Scroll Down for Featured Resources and Transcript!

Please note that some of the links on this page (books, movies, music, etc.) lead to affiliate programs for which The Jordan Harbinger Show receives compensation. It’s just one of the ways we keep the lights on around here. Thank you for your support!

Sign up for Six-Minute Networking — our free networking and relationship development mini course — at jordanharbinger.com/course!

This Episode Is Sponsored By:

- Starbucks: Try Starbucks Tripleshot energy today

- SeekR: Go to seekr.com to learn how you can make better decisions with access to better information

- Bambee: Schedule your free HR audit at bambee.com/jordan

- BetterHelp: Get 10% off your first month at betterhelp.com/jordan

- Zelle: Learn more at zellepay.com

Miss the show we did with award-winning cybersecurity journalist Nicole Perlroth? Catch up with episode 542: Nicole Perlroth | Who’s Winning the Cyberweapons Arms Race? here!

Thanks, Daniel J. Levitin!

If you enjoyed this session with Daniel J. Levitin, let him know by clicking on the link below and sending him a quick shout out at Twitter:

Click here to thank Daniel J. Levitin at Twitter!

Click here to let Jordan know about your number one takeaway from this episode!

And if you want us to answer your questions on one of our upcoming weekly Feedback Friday episodes, drop us a line at friday@jordanharbinger.com.

Resources from This Episode:

- Weaponized Lies: How to Think Critically in the Post-Truth Era by Daniel J. Levitin | Amazon

- The Organized Mind: Thinking Straight in the Age of Information Overload by Daniel J. Levitin | Amazon

- Daniel Levitin | Website

- Daniel Levitin | Twitter

- Sally Clark is Wrongly Convicted of Murdering Her Children | Bayesians Without Borders

- The Crash and Burn of an Autism Guru | The New York Times Magazine

- The Undoing Project: A Friendship That Changed Our Minds by Michael Lewis | Amazon

- Michael Shermer | Why We Believe Weird Things | Jordan Harbinger

- Sam Harris | Making Sense of the Present Tense | Jordan Harbinger

- Trump’s ‘Dangerous Disability’? It’s the Dunning-Kruger Effect | Bloomberg View

- The Big Short | Prime Video

- An Inconvenient Truth | Prime Video

- The 5 Elements of Effective Thinking by Edward B. Burger and Michael Starbird | Amazon

- Jonah Ryan | Veep

- The Definitive Fact-Checking Site and Reference Source for Urban Legends, Folklore, Myths, Rumors, and Misinformation | Snopes

- Independent Fact-Checking | PolitiFact

662: Daniel J. Levitin | How to Think Critically in the Post-Truth Era

[00:00:00] Jordan Harbinger: Special thanks to the new Starbucks Baya Energy drink for sponsoring this show. With caffeine naturally found in coffee fruit, it's energy that's good.

[00:00:06] Coming up next on The Jordan Harbinger Show.

[00:00:09] Daniel J. Levitin: My favorite example is that the number of people who die from getting entangled in their bedsheets is correlated with the per capita consumption of cheese. So I suppose you could spin a story that people who want to die by strangulation in their bedsheets, decide to have one last rich meal of cheese. And so they go out and buy a whole lot of it. Or maybe people eat a whole bunch of cheese and they get into a dairy-induced stupor and end up strangling themselves. But more likely these two things are unrelated.

[00:00:44] Jordan Harbinger: Welcome to the show. I'm Jordan Harbinger. On The Jordan Harbinger Show, we decode the stories, secrets, and skills of the world's most fascinating people. We have in-depth conversations with scientists, entrepreneurs, spies, and psychologists, even the occasional four-star general, Russian spy, or hostage negotiator. And each episode turns our guests' wisdom into practical advice that you can use to build a deeper understanding of how the world works and become a better thinker.

[00:01:09] If you're new to the show, or you want to tell your friends about it — and I always appreciate it when you do that — our starter packs are the place to begin. These are collections of our favorite episodes, organized by topic. They'll help new listeners get a taste of everything we do here on the show — topics like persuasion, influence, abnormal psychology, China and North Korea, criminal justice and law enforcement, scams and conspiracy debunks, crime and cults, and more. Just visit jordanharbinger.com/start or search for us in your Spotify app to get started.

[00:01:39] Today, we're talking with Daniel. He's the author of Weaponized Lies, great title, by the way. We're living in an age of information overload. We're constantly bombarded by info, right? It's getting harder and harder to tell what's true and what's not. Misinformation, disinformation, irresponsible information — we're going to try and help everyone make better decisions and to think more effectively about the information you encounter in your day-to-day life. Today, we'll explore critical thinking techniques involving experts, pseudo-experts, data, charts, words, and numbers. And we'll learn some techniques to think more critically and dismantle arguments on the fly, and last but not least, we'll find out why the average person only has one testicle and what that means for you. So enjoy this episode with Daniel Levitin.

[00:02:23] A lot of folks, when they think about these topics, they think, "Yeah, you know what? Other people sure are dumb and they get misled a lot. But I looked at this newspaper article the other day and it said that half of all humans only have one testicle. That was shocking. We need to do something about that." That actually, of course, is almost true, given that half the population has no testicles. These kinds of things are not always so simple, but I think that even people who are educated, smart, and think that they think critically, sometimes are the ones that fall victim to this stuff even more.
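The "one testicle" line is a claim about an average, and an average can describe nobody in the group. A minimal Python sketch, assuming an idealized population of ten people split evenly between two testicles and none (the population size and the even split are illustrative assumptions, not data from the episode):

```python
from statistics import mean

# Hypothetical, idealized population: half have two testicles, half have none.
population = [2] * 5 + [0] * 5

# The mean summarizes the group as a whole.
average = mean(population)

# Counting individuals shows how many people the mean actually describes.
people_with_exactly_one = sum(1 for count in population if count == 1)

print(f"average per person: {average}")
print(f"people with exactly one: {people_with_exactly_one}")
```

The mean comes out to one per person even though no individual in this made-up group has exactly one, which is why a summary number needs the distribution behind it.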

[00:02:58] Daniel J. Levitin: I think the most important quality to have, if you want to be an evidence-based thinker — a critical thinker — is humility. If you realize that you don't know everything and you're open-minded enough to take in new information, you can save yourself a lot of trouble. The most dangerous thing is somebody who is so sure they know something, but they're wrong. And then they go off headstrong into the abyss, thinking that they're absolutely right, and disaster can result.

[00:03:28] You hear stories every once in a while about somebody who puts jet engines on their car, because they think it'll make it go fast. And they're so sure it's going to work, but they haven't thought ahead to what will happen once the car has lifted up off the ground, how are they going to steer this? And a number of famous disasters, the Challenger explosion, the Exxon Valdez, the Fukushima power plant, can be chalked up in part to overconfidence, to not realizing that we don't know everything we think we do.

[00:03:58] Jordan Harbinger: And that's a little scary, especially in an age where information is constantly bombarding us. We haven't necessarily evolved the tools to try to think critically at high speed about everything that's coming at us and requires a quick decision and things like that. And this is true from social media, when we're looking at our Facebook, all the way to discussions we're having with people who are maybe deceiving us by accident, simply because they're repeating something they heard from somebody else, and it doesn't have to be false facts.

[00:04:26] The problem is sometimes the facts themselves can be true but they're skewed. They're skewed deliberately or by accident using statistics or math or anecdotal evidence instead of empirical evidence, and that's a big problem. I noticed in Weaponized Lies your book, that critical thinking when it comes to what we're hearing and reading, it's really all around us and it has been since I was a kid. I mean, even those commercials, four out of five dentists approve of this toothbrush, even that stuff is just kind of ubiquitous. And now, it's maybe a little bit more nefarious, not just used to sell toothbrushes but to sell political ideas.

[00:05:03] Daniel J. Levitin: The problem is that critical thinking is hard. We didn't evolve brains to think about the kinds of data that we are encountering these days, statistical data, big data. We evolved to deal with things like rocks rolling down hills and warring tribes coming at us. And I think the first step to making good decisions in any domain that you make decisions in — whether it's your finances or relationships, or try to choose a job, all of those things — the first step is to realize that thinking about this stuff is hard and we have to take some time to do it, and we have to practice doing it and recognizing our failings can help us to avoid the pitfalls.

[00:05:47] You mentioned skewed data, and it got me to thinking, you know, one of the tools I try to provide in the book and I'd like to share with people who are listening today is that often you'll find, if you go and look into it, the data are true, but they're completely irrelevant to the point that's tried to be made. One of my favorite examples of this is the finding that was published after my book came out. Studies showed that the number of books read by American schoolchildren falls every year after second grade. The number of books read per year declines for every year after second grade. What you're led to believe is that either students are slothful and lazy or that — what's going on in the schools that they're not teaching our students discipline and good study habits? It's the collapse of the modern educational system?

[00:06:40] All these things might be implications and unless you stop and you think, "Wait a minute. Maybe the number of books read per year is not really relevant to any of these issues." In second grade, you're reading short little books. You know, they might be 10 or 15 pages long. By the time you're in junior high school, you're reading Lord of the Flies, 195 pages. By the time you're a freshman in college, you might be reading War and Peace, 1200 pages. Number of books is not the relevant metric if you want to figure out how scholarly students are.
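The books-per-year point can be checked with simple arithmetic on the page counts quoted above. A small Python sketch, assuming 15 pages (the upper end of "10 or 15") as the size of a second-grade book; the function name `short_book_equivalents` is made up for illustration:

```python
# Page counts quoted in the conversation; 15 is the upper end of "10 or 15 pages".
PAGES_PER_BOOK = {
    "second-grade book": 15,
    "Lord of the Flies": 195,
    "War and Peace": 1200,
}


def short_book_equivalents(pages: int, short_book_pages: int = 15) -> float:
    """Return how many short second-grade books add up to the given page count."""
    return pages / short_book_pages


for title, pages in PAGES_PER_BOOK.items():
    equivalents = short_book_equivalents(pages)
    print(f"{title}: {pages} pages = {equivalents:.0f} short books")
```

Measured in pages, a single long novel stands in for dozens of early-reader books, so a falling count of books says little about how much students actually read.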

[00:07:10] Jordan Harbinger: That's interesting for me because I essentially didn't read until my 30s, other than school books, but I went to law school. So if you ask me how much I read before age 30, I would say, "Almost nothing," but the truth is I read probably more than any normal person ever would. I just read legal cases and other stuff like that.

[00:07:29] So it really does matter the data that you're looking at. And of course, you're not necessarily withholding data on purpose, we're just not necessarily asking the right questions. And in Weaponized Lies, you do talk about some of those questions. And I'm specifically going to leave out the math and probability notes on this I would say because we don't need to prove everything today here on the show. And people can get the same info in Weaponized Lies if they need it. I want to focus on the ideas and the example.

[00:07:53] So are there five or six, maybe, major categories we see as humans with media manipulation? We see companies manipulating us, for example. Why don't we just start there? I feel like marketing and having companies manipulate us has been par for the course for so long. What are some of the most common examples you see of that, that most people maybe don't see or notice?

[00:08:15] Daniel J. Levitin: I think in general, yeah, there are some big categories of ways that we can be manipulated by companies, by the government. One of them is the lack of a control group, and this is a concept borrowed from science, but you might read claims for echinacea that it helps to fend off a cold. You feel a cold coming on, you take echinacea, and then maybe four or five days later, you're completely better. There's no evidence at all that echinacea can help fend off a cold. What you need is a controlled study. You need people who are all coming down with colds and you give half echinacea and you give half of them a pill that looks exactly like it. That's what's called the control group. And you don't tell anybody which is which, so their expectations don't factor into it. If you do that, it turns out echinacea has no effect.

[00:09:00] But there are a lot of claims made where there's a missing control group and it's not just medicines. It's things like, "Oh, if your parents read to you as a child, you're likely to do better." But we don't know how well or poorly you would've done if your parents didn't read to you as a child. It's not controlled, you see. A second category is the irrelevant data that we were just talking about with the books read example. A third category is claims that are asking you to believe one thing but if you look carefully at the language, it might raise your suspicions. A lot of claims are vague or misleading, and they're intended to be that way. Other times the people telling you these things just don't know the difference themselves. You mentioned four out of five dentists, maybe we can dig into that a little bit.

[00:09:50] Jordan Harbinger: Sure. Yeah, exactly.

[00:09:52] Daniel J. Levitin: So there was a claim that four out of five dentists recommend Colgate. This was a big ad campaign. Now, if you're a critical thinker, I mean, there are a number of questions you'd want to ask here. Like, who are these dentists? Do they still have their medical licenses? Are they getting money from Colgate? Another question is how many dentists did they ask? Did they literally ask just five or did they ask 500 and 400 recommended Colgate? This matters. More to the point, you might want to know what question would a dentist be able to answer given a dentist's expertise.

[00:10:27] I've been going to a dentist all my life. My dentist has never asked me what toothpaste I use. He doesn't keep track. In order to know what toothpaste is best, you need to do one of these controlled studies we were talking about with echinacea where you give a bunch of people Colgate and you give other people Crest and other people Arm & Hammer and Gleem and Aim and Aquafresh and all the different toothpastes. And then you wait and see who develops the most cavities or you measure gingivitis or bad breath or whatever you're interested in. That's a controlled study. Without that, you don't really know. You'd need a medical researcher to do that. But dentists aren't running these kinds of studies that I know of. So I would actually add this as a separate category, a case of failed expertise or pseudo-expertise.
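The question of whether they asked five dentists or 500 can be made concrete with a confidence interval. The sketch below uses the Wilson score interval at roughly 95 percent confidence (z = 1.96); that technique is chosen here for illustration and is not mentioned in the episode, and the counts are the two hypothetical scenarios from the conversation, not real survey data:

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# Same headline ratio (four out of five), very different sample sizes.
for successes, n in [(4, 5), (400, 500)]:
    low, high = wilson_interval(successes, n)
    print(f"{successes} of {n}: plausible range {low:.0%} to {high:.0%}")
```

Both scenarios produce the same "four out of five" headline, but the range of plausible true rates is far wider when only five dentists were asked.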

[00:11:09] Jordan Harbinger: Sure, right.

[00:11:10] Daniel J. Levitin: Somebody is pretending to be an expert and they're not.

[00:11:13] Jordan Harbinger: Five out of 10 of my dad's friends don't recommend using smartphones, but I'm not going to listen to them because they don't know anything about technology or people who are my age and how we live and work, right? So failed expertise and definitely — and I got to just say, side note, I'm very impressed by your ability to rifle off so many different brands of toothpaste without even pausing. I don't think I could do that.

[00:11:33] Daniel J. Levitin: You should try me with breakfast cereals.

[00:11:36] Jordan Harbinger: Pseudo-expertise. Great topic. I'm really glad that you mentioned that. That's something that I feel like we fight a lot, both when we're watching the news here. And just on the show, pseudo-expertise is something that is — first of all, kind of a cancerous thing in society in general, certainly on the Internet and in business especially. In the business niche, I field questions about this all the time, "So-and-so's worth $600 million." Well, no, and no, and also no. And they told you that to sell you this product. And also that person has nothing to do with the field that they're selling you the product. I mean, there's so many things wrong with this. I would love for you to rip this one open.

[00:12:15] Daniel J. Levitin: Well, you're absolutely right. The fact is expertise has become increasingly narrow in the last 30 or 40 years. There's so much information that we've created as a society. By Google's own estimate, we've created as much information in the last five years as in all of human history before it. And so if you're a biologist or a cancer doctor or a specialist on Chinese art or a political pundit and economist, if you want to maintain a foothold of expertise in your area, it's going to tend to be narrow. You'd be hard-pressed to find somebody who is an expert in the law. They're going to be experts in constitutional law or torts or criminal law, and even within criminal law, they might be experts in murder, but not in robbery. Expertise tends to be narrow.

[00:13:01] So I find this most often irritating with scientists who start talking outside of their domain. This isn't just an intellectual problem. It can have very real practical consequences. I'm thinking of the story of Sally Ann Clark, who was a young woman in England, whose first baby died of what they called Sudden Infant Death Syndrome, SIDS. And then a few years later, she managed to become pregnant again, she gave birth to a second child and within a few months, that child had died. Well, a prosecutor tried her for double murder, claiming that the odds of two infants dying of that syndrome in the same household from the same mother were astronomically low. She must have murdered one or both of them. And they trotted out a pediatrician who testified for the Crown in England and said, "Yo, the statistics are unbelievably low. She must have murdered one of the kids."

[00:13:58] Well, let's take a step back now and think of this through the lens of expertise. Getting back to that conversation we had a moment ago about dentists, which toothpaste is the best for you to use because they're generally — most of them aren't keeping track. Ask yourself, is a pediatrician an expert on infant death?

[00:14:16] Jordan Harbinger: That's a good point. Not necessarily. They're experts in infant health. It sounds like they should be an expert in infant death, but really a coroner would be an expert.

[00:14:25] Daniel J. Levitin: There you go. You want to talk to somebody who has seen hundreds of infant deaths in his or her career, and if you're a competent pediatrician, you might only see one or two, hopefully. I mean, you know, infant death is relatively rare, fortunately. So the pediatrician messed up his statistics because he's not trained to think about infant death and that put this woman in prison.

[00:14:50] Jordan Harbinger: That's very tragic and it seems like the pediatrician should have known, but I would imagine he's thinking, "No, I've read several articles about this. I am an expert and I'm also a doctor."

[00:14:59] Daniel J. Levitin: Well, there's a conflict of interest. Like with the dentists who are recommending toothpaste, they don't actually benefit financially if you have good oral health. They make their money if you don't. And I'm not accusing dentists of having an ulterior motive, but you do have to worry about this kind of bias, at least, subconsciously. And of course, there are a few bad apples and in the pediatrician's case, he makes more money if he's an expert witness than if he's not. So there's an intrinsic bias there. As you say, you need a coroner or a medical examiner. Ultimately, Sally Ann Clark was exonerated and freed from prison, but she ended up serving three years first. And the whole experience was so horrible that she ended up committing suicide.

[00:15:40] Jordan Harbinger: Oh my god, that is terrible. Of course, because she lost two of her babies and then I would assume her marriage fell apart while she was in prison for murder.

[00:15:47] Daniel J. Levitin: I don't know about that. I do know that her husband stood by her and he believed her innocence.

[00:15:55] Jordan Harbinger: You're listening to The Jordan Harbinger Show with our guest Daniel Levitin. We'll be right back.

[00:16:00] This episode is sponsored in part by Seekr. We live in an age of information overload, and it's hard to tell what's true or false. As you know, from listening to this episode, I always tell you about how important it is to think critically. And we need to take steps to better evaluate news and get access to reliable information, which is why I want to tell you about Seekr. Seekr is like any other search engine except Seekr makes it easier for you to access reliable and better information by using AI and machine learning to give you transparency about what you consume online. So how it works is when you're about to click on an article, you'll see a Seekr score that tells you how reliable the information is. Seekr evaluates the articles using best practice journalistic principles. So you know what you're getting yourself into before you even read the article. You can even adjust filters for what political lean preference you'd like. I find these features all really useful when I'm trying to access reliable information that's not just clickbait or incoherence or rants, et cetera. Go to seekr.com to learn how you can make better decisions with access to better information. That's S-E-E-K-R.com.

[00:16:58] This episode is also sponsored by Bambee. As a small business, I remember running into the problem of trying to figure out HR ourselves, because hiring a full-time HR manager for 80K a year was just not a fit. Bambee allows you to outsource your HR without losing the human element. You actually get your own dedicated HR manager who can help you navigate the complex parts of HR and guide you to compliance. Use Bambee to create a company policy, workplace training, and employee feedback. I love that employees can take important and often mandatory training, right in Bambee, like sexual harassment, workplace safety, business ethics, and Bambee will report back to you on everyone's progress. This is all for as low as $99 a month. No hidden fees, cancel anytime. Bambee has received thousands of five-star reviews on Trustpilot and their customers are four times less likely to have a claim filed against them. You run your business, let Bambee run your HR.

[00:17:48] Jen Harbinger: Go to bambee.com/jordan right now for your free HR audit, spelled B-A-M-B-E-E.com/jordan, bambee.com/jordan.

[00:17:57] Jordan Harbinger: If you're wondering how I managed to book all these folks, it's about my network — and I know you don't have a podcast. You don't care about that — but I'm teaching you how to build your own network, whether it's for personal or professional reasons. I'm teaching you how to do it for free. It's our Six-Minute Networking course. It's over at jordanharbinger.com/course. The course is all about improving your connection skills and inspiring others to develop personal and professional relationships with you. It will make you a better networker, but it will also make you a better connector and a better thinker. That's at jordanharbinger.com/course. And by the way, most of the guests you hear on the show, they subscribe and contribute to the course. It's kind of a living, breathing thing. So come join us, you'll be in smart company.

[00:18:37] Now back to Daniel Levitin.

[00:18:40] One thing that seems to be in the media lately, that drives me bananas is this anti-vax crowd of not vaccinating your kids. And of course, now we end up with problems where healthy kids with parents who aren't knuckleheads are dying or getting measles because they have to go to school with somebody whose parents decided to read something on Infowars and now everybody's getting diseases that were eradicated when they got rid of pirates. Well, I guess, we still have pirates. So it's fitting that we still have measles, never mind. What's going on with these folks?

[00:19:10] Daniel J. Levitin: This is a hornet's nest. You and I both live near ground zero for the anti-vaxxers, which is Marin County, California. And I've been traveling around the country and I've run into these pockets of anti-vaxxers. And the interesting thing is they tend to be better educated than the average person. They tend to be relatively affluent. And they've somehow got it in their heads, that vaccines cause autism — the measles, mumps, rubella vaccine, in particular, MMR.

[00:19:41] It began with an article in a medical journal by Andrew Wakefield, not an expert on autism, but a physician in England who presented evidence that vaccines caused autism. Well, it turned out he admitted to fabricating data. His paper was retracted and he's been discredited and lost his medical license. But the story about this fake connection persists. And one of the things I can tell you as a neuroscientist is that once you come to hold a belief, it's very, very difficult to get you to give it up. Your brain clings tenaciously to beliefs that it's held, even when the evidence has been found to be bogus or untrue.

[00:20:23] The additional problem is that we do see a correlation. In other words, parents who have kids with autism in a large number of cases did vaccinate their kids and then the autism showed up sometime after, but it turns out that's explainable. You can't give vaccines too early in a toddler's life, their immune system isn't ready for them. We give vaccines at a very precise point in the development of a child when they're ready. The other problem is that autism by definition is a developmental delay. It's not hitting your regular developmental milestones and it takes until a certain age before you notice that. You don't notice that your child isn't talking normally until after the age when he or she would be talking at all. So the problem is that the vaccines are almost always given before the autism, just because the time course of when the vaccine should be given is at a younger age than when you can even notice the autism. It doesn't mean that the one caused the other.

[00:21:24] Jordan Harbinger: Right. Of course. And the correlation and causation is another area, a great accidental or possibly deliberate segue I'd love to hear about. And I've seen this on television and I feel bad for these people. They say, "Look, I vaccinated my son and he got autism and I met another person with an autistic son and he had his kids vaccinated. So I'm not going to have the rest of my kids vaccinated." It's not totally illogical when you look at it like that, given the emotions in play and the consequences in play. But it's kind of like saying, "Well, be careful. Don't get your kid a driver's license because 99 percent of the people that drink and drive are people who have driver's licenses and are of driving age." It's like, well, yeah, they do, because those are the people who are old enough to drive and are able to drive and know how to drive and are at the age where their friends and them are drinking. They don't do it when they're 11. They don't do either of those things generally when they're 11.

[00:22:17] So it doesn't necessarily mean that one causes the other, but can you give us some more concrete ways to think about this and to look at these problems critically, because I want to give people some tools here. I think these are very important to look at not only these claims and evaluate them differently, but any claim and evaluate them differently.

[00:22:35] Daniel J. Levitin: If you're not careful, your law school training is going to show through here.

[00:22:38] Jordan Harbinger: I know, oops. I thought I buried that.

[00:22:41] Daniel J. Levitin: Before we go to the correlation, causation in general, let's circle back to the autism-vaccine connection for a moment and invoke one of the earlier principles we were talking about, the control group. So it turns out that the way you would really know if vaccines cause autism is you take a bunch of kids at random and you'd give them vaccines and another bunch of kids at random, and you'd give them a sham vaccine. You know, you poke them with a needle, but not really give them anything. And you wait and see if they develop autism in equal numbers.

[00:23:13] Jordan Harbinger: I'm guessing that's not going to be allowed anywhere, any time soon.

[00:23:17] Daniel J. Levitin: It's unethical to do that. But as you pointed out, the experiment was in fact done in communities such as Marin County and some pockets in rural England where people just stopped vaccinating their kids. In those communities, we tended to see measles outbreaks, of course, because the kids don't have the measles vaccine. And they're not immune to it anymore and that can have terrible consequences. But more to the point, across a 10-year span in which vaccines were eliminated, autism rates remained the same.

[00:23:47] Jordan Harbinger: Ugh.

[00:23:48] Daniel J. Levitin: So it couldn't have been the vaccines causing the autism, right? Here, you've got the same incidence of autism, even without the vaccines, but the anti-vaxxers still aren't buying it. And you know, I have to tip my hat to them because the instinct to question authority and to worry that maybe big pharma and the government have some profit motive, that's a cornerstone of critical thinking, of course. That very kind of questioning is what I'm proposing we need more of. The problem is with the follow-through. It's not enough to ask the questions. You have to then seek out evidence that will help support an answer to the question.

[00:24:25] Jordan Harbinger: I want to clarify one point that you make early on in Weaponized Lies, which is that not knowing this stuff does not make us dumb. Can you expand on that?

[00:24:34] Daniel J. Levitin: Well, what I'm trying to say is that these things are very, very hard and they mess up a lot of smart people. I worked for a decision-making scientist named Amos Tversky, who collaborated with Danny Kahneman. As you may know, Kahneman won the Nobel Prize. Tversky probably would have shared it with him, but Tversky passed and they don't award Nobels posthumously. But Kahneman and Tversky are responsible for a lot of this literature and what they showed is that even people with PhDs in statistics and medical doctors mess up on these kinds of thought problems and real-world problems all the time, because it is so hard. So not being able to think this way doesn't make you dumb, it's just that our brains weren't configured like this. And the silver lining is that if we work at it and we recognize our weaknesses, we can train ourselves to be better.

[00:25:25] Jordan Harbinger: I know Daniel Kahneman. I'm never going to get a Nobel Prize for anything, I would imagine, but I do have a decent professional education and I often have a very hard time with things like statistics, statistical thinking, wrapping my head around this stuff, and bear in mind, I was trained to think critically in law school about topics just like this. It just doesn't mean that I can do it all the time, especially when it comes to numbers and data and doing it in real-time while having a conversation. And it seems like our brains are actually evolved to use specific types of data and maybe not others. I mean, looking at visualizations and things like that, you mentioned in Weaponized Lies that's easier. But even graphs and things like that can be used to manipulate data when people really want to do it.

[00:26:10] Daniel J. Levitin: They sure can. In some cases, as with verbal descriptions of things, the person drawing the graph is trying to put one over on you and in other cases, they just don't know better themselves. And I'm grateful to Fox News for supplying so many wonderful examples of misleading graphs. I reproduce them in the book, like a pie chart where the different slices add up to more than 100 percent, which is completely nonsensical, right? You're dividing the pie into pieces. Or graphs that give you a visual impression that's very different from the numbers in order to make you think that an effect is larger or smaller than it really is.

[00:26:48] If you see a graph or a chart or a diagram in the newspaper or on Facebook, or what have you, if the bar graph or the line graph has axes that aren't labeled, or if there are no numbers next to the tick marks, just ignore it because you could draw anything there if there are no numbers on it and it could be accurate, but you don't really know what the truth is.

[00:27:10] Jordan Harbinger: That is a little scary, right? Because I could imagine it's — for example, when I look at SEC filings, which I do as rarely as I have to, I look at things like these documents and we have to be really careful. I used to work on Wall Street with financial stuff. And if you think disclaimers are huge in insurance and things like that, you haven't seen nothing yet. Looking at SEC filings, they can't even use visuals in many cases because they're so accidentally misleading. So if I say something like, "I really think this is a good investment," and I show a random chart with, like you said, no axes labeled and there's just a line going up and it looks kind of like a graph — can't do it. The idea is I want you to sort of, maybe I'm lying by omission, letting you think that this is a graph of this stock or this security or this company's revenue, and it's going up. You can't do it. People have even gotten in hot water for things like company logos that look like graphs that go up. You just can't do it. We don't take care of ourselves in most other areas. The SEC is particularly cautious — well, in certain cases and reckless in others if you ask me, but these types of things are so accidentally misleading that we have rules against it.

[00:28:19] Daniel J. Levitin: But the rules don't seem to apply to television advertising, certainly not to Internet advertising. And just to make it a concrete example, say you're trying to sell something to somebody. It could be stock. It could be investors in your company, whatever. You want to show that your profitability has gone up and you want as steep a line as possible, right? Well, suppose that you had a million dollars of sales last year, and then this year, you have a million dollars and one cent. Well, if you make a graph and you don't label the axes, you can have a very steep looking curve for that one cent if the little tick marks each represent a hundredth of a cent. Oh my goodness, look how high up it went. You just start the graph at a million dollars and you end the graph at a million dollars and 1 cent and you don't label anything. You could even lose money and have the graph appear to be going up if you have the negative numbers going upward, the positive numbers going downward. I've seen that too.
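
[Note: The one-cent example can be checked with a little arithmetic. The sketch below is illustrative only and is not from the book or the episode. The function name `visual_rise` and the choice to measure "steepness" as the share of the plot's height the line climbs are assumptions made for this example.]

```python
def visual_rise(start_value, end_value, axis_min, axis_max):
    """Fraction of the plot's height covered by the change from start to end.

    Hypothetical helper for illustration: 1.0 means the line climbs from the
    very bottom of the chart to the very top.
    """
    return (end_value - start_value) / (axis_max - axis_min)


# Work in whole cents so the arithmetic stays exact.
last_year = 100_000_000  # $1,000,000.00
this_year = 100_000_001  # $1,000,000.01

# Honest chart: the vertical axis starts at zero.
honest = visual_rise(last_year, this_year, axis_min=0, axis_max=this_year)

# Misleading chart: the axis starts at last year's value and ends at this year's.
truncated = visual_rise(last_year, this_year, axis_min=last_year, axis_max=this_year)

actual_growth = (this_year - last_year) / last_year

print(f"actual growth:              {actual_growth:.10f}")
print(f"rise with axis from zero:   {honest:.10f} of plot height")
print(f"rise with truncated axis:   {truncated:.10f} of plot height")
```

With the axis starting at zero, the line barely moves. With the axis cropped to the two values, the same one-cent change fills the whole chart, which is why unlabeled tick marks hide the trick.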

[00:29:16] Jordan Harbinger: Yeah. That's, of course, very scary. Because again, if we're not thinking actively about this, people are trying to reach our emotional brain using these visuals. And we're evolved to look for patterns. Patternicity is something that Michael Shermer talked about and I think Sam Harris talked about here on the show as well. We're really bad as humans at seeing patterns in text. But it is easier to get tricked by graphs and visuals, unlabeled axes like you mentioned. I like the idea that if you don't see labeled axes, take everything with a grain of salt and/or just ignore it because they are trying to trick you.

[00:29:49] Going back to the education level of people that, quote-unquote, "fall for this stuff," where and what role does the Dunning-Kruger effect play? Can you take us through, first of all, what that is? It's one of my favorite rules. As long as we're making lists of rules, it's one of my top go-tos. Some people are so dumb they think they're smart because they're like, "I don't see why we don't just build a wall because if there's a wall, then they can't run over the border." And it's like, the reason we haven't built a wall is because people who have more than three brain cells realize that immigrants aren't just walking across the Rio Grande. They're flying in on airplanes and then they never go home, you know? So it's like that kind of thing.

[00:30:25] Daniel J. Levitin: Yeah, I know this because there was a science article about it in the journal Science. The problem with people who are ignorant is twofold. I mean, the first problem is that ignorance can lead to problems. But the second part is that they're ignorant, typically, of their own areas of ignorance. And so they're so sure that they're right, that they end up making either big mistakes or nonsensical pronouncements, which really makes a nice full circle with where we began our conversation.

[00:30:54] One of my favorite examples of this actually happened to me. I saw the movie The Big Short, as I imagine many of our listeners did. I was struck by a quote in it, on one of the panels between scenes. "It ain't what you think you know that gets you into trouble. It's what you know for sure that ain't so."

[00:31:13] Jordan Harbinger: Right.

[00:31:14] Daniel J. Levitin: Attributed to Mark Twain. I remember also seeing it in Al Gore's film, An Inconvenient Truth, the identical quote. And so I thought, well, that's interesting. "It ain't what you think you know, it's what you know for sure that just ain't so." And so I put it in my book as an opening epigraph. Then after I submitted the book to the publisher, I had a month or so to track down all of the quotes and all of the articles and just, you know, make sure that everything was shipshape. And I could not find that quote in any of Mark Twain's writings at all. I went to the library and I looked in books of quotations. I did Internet searches.

[00:31:52] I finally called up a librarian, the English librarian at Vassar, Gretchen Lieb, because you know, librarians are really smart about this stuff. And they've got special training. You may not know this, but at universities, librarians hold a rank equivalent to professors. It's a very serious job with serious training. And I asked her for her help and using all of the resources that she had, she found no evidence that Twain ever said this, which is so deliciously ironic because what it means is that both Al Gore's filmmakers and The Big Short filmmakers succumbed to the very illusion that they're warning against. They were so sure that the quote came from Mark Twain, they didn't bother to check it out. It's what they knew for sure that just wasn't so.

[00:32:36] In fact, the librarian couldn't find the quote anywhere. And I think the reason that we all buy it is that it kind of sounds like something Mark Twain might say. It's got the word "ain't" in it. You know, "ain't so" has the kind of ring of the way he would write. But if you look at the literature of that period, there was something close to the idea floated by Bret Harte and H.L. Mencken, two other American humorists. It also sounds like something Will Rogers might have said, but none of them actually said it. The first documented appearance of it is in the Al Gore film.

[00:33:11] Jordan Harbinger: This is The Jordan Harbinger Show with our guest Daniel Levitin. We'll be right back.

[00:33:16] This episode is sponsored in part by Better Help online therapy. You deserve to take care of yourself physically and mentally. Face it, life can be overwhelming. It's easy to get burned out without even knowing it. You might feel a lack of motivation or helplessness, detachment. You might feel trapped. You might just feel tired all the time. There's a host of other things that get in the way of you doing what you want to do. And some days, yeah, maybe you just don't feel like getting out of bed. I've got a fitness coach, a family, work I need to do. I managed to keep on going, but it's not always easy. Burnout is not always work-related. It's about living your life and Better Help online therapy is there to help prioritize you and your life. Talking with somebody can help you figure out what's causing stress in your life. Better Help is customized online therapy that offers video, phone, even live chat sessions with your therapist, and it's much more affordable than in-person therapy. Plus, you can get matched with a therapist in under 48 hours.

[00:34:07] Jen Harbinger: And our listeners get 10 percent off your first month of Better Help therapy. Just go to better-H-E-L-P.com/jordan to get started.

[00:34:15] Jordan Harbinger: This episode is also sponsored by Starbucks. The new Starbucks Baya Energy drink is created from caffeine naturally found in coffee fruit. It includes vitamin C, which is an antioxidant. So it's a great beverage to bring to a summer barbecue or a golf game or the beach, or even gardening in the backyard. Starbucks Baya Energy drink comes in three delicious fruity flavors, mango guava, raspberry lime, and my personal favorite, pineapple passion fruit. I like to drink mine with a little umbrella and I stick my pinky out when I drink it. It's a perfect pick-me-up when you're out and about on a summer day. Pack a Starbucks Baya Energy drink when you take your kids to the park. You drink it, not the kids. They got enough energy. Each 12-ounce 90-calorie can contains 160 milligrams of caffeine. It'll give you a refreshing fruit-flavored boost, a feel-good energy in a way only Starbucks can deliver.

[00:34:59] Jen Harbinger: Starbucks Baya Energy drink is available online, at grocery stores, convenience stores, and gas stations nationwide.

[00:35:05] Jordan Harbinger: This episode is also sponsored in part by Zelle. Zelle is a great way to send money to family and friends, no matter where they bank in the US. Cash, who needs it? Wallet, who carries one? I pretty much have everything I need on my phone. I send money with Zelle. It's fast. It's easy. Want to split the cost of a group dinner, chip in on a gift, send money to a friend who needs some help? Sending money with Zelle is super easy. And don't worry, you don't have to download another app. Zelle is probably already in your banking app since it's in over a thousand different banking apps. The money sent goes straight into the recipient's bank account, typically in minutes between enrolled users. And Zelle doesn't charge a fee to send or receive money. After you enroll with Zelle, you just need an email or phone number to send or receive money from your bank account. So look for Zelle in your banking app today.

[00:35:46] Thank you so much for listening to and supporting the show. You guys — really, you are the best fans. The sponsors love you. The team loves you. I really appreciate that many of you support those who make this show possible, and we put all the discount codes in the deals and the URLs on one page. jordanharbinger.com/deals is where you can find it. And you can search for any sponsor using the search box right on the website as well. Again, please consider supporting those who support us.

[00:36:13] Now for the rest of my conversation with Daniel Levitin.

[00:36:18] An exercise that I like to do when I look at things like correlation versus causation, or when I see examples of something that is a so-called rule, I try to think of ridiculous examples of that and see if it holds up. And one you gave in Weaponized Lies is really good. Nicolas Cage movies versus drownings. Can you take us through that scenario?

[00:36:38] Daniel J. Levitin: Yeah. So we're talking about things that correlate, but that doesn't necessarily mean one caused the other. Just to take an example, I made myself a cup of green tea about an hour ago, and then not long after the phone rang and there you were. I don't think that my making the cup of green tea caused you to call. And I don't think that one could make an argument that it did, but if in fact, every time you and I talk, I had a cup of green tea before, I still wouldn't want to conclude that one caused the other. And a guy named Tyler Vigen, a Harvard Law School student, has a bunch of ridiculous examples to sort of put a finer point on it.

[00:37:15] And the idea is that the world is so complicated and there's so many things going on that if you look hard enough, you'll find things that co-vary. By that, I mean, this one increases and another thing increases with it and they both decrease according to the same pattern. And what he found is that year by year, the number of Nicolas Cage movies made correlates with the number of people who drown in swimming pools. My favorite example is that the number of people who die from getting entangled in their bedsheets is correlated with the per capita consumption of cheese. So, I suppose you could spin a story that people who want to die by strangulation in their bedsheets decide to have one last rich meal of cheese. And so they go out and buy a whole lot of it, or maybe people eat a whole bunch of cheese and they get into a dairy-induced stupor and end up strangling themselves. But more likely these two things are unrelated.
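
[Note: The point that "if you look hard enough, you'll find things that co-vary" can be demonstrated with made-up numbers. The sketch below is illustrative only. It does not use Tyler Vigen's data or method; it generates unrelated random series as a stand-in for yearly statistics, and the names `pearson` and `strongest_pair`, the series counts, and the seed are all assumptions chosen for this example.]

```python
import itertools
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def strongest_pair(num_series=200, num_years=10, seed=0):
    """Generate unrelated random series and return the most correlated pair.

    Returns (absolute correlation, index of first series, index of second).
    Every series is independent noise, so any strong correlation is a
    coincidence produced by searching many pairs.
    """
    rng = random.Random(seed)
    series = [
        [rng.gauss(0, 1) for _ in range(num_years)]
        for _ in range(num_series)
    ]
    indexed_pairs = itertools.combinations(enumerate(series), 2)
    return max(
        (abs(pearson(a, b)), i, j) for (i, a), (j, b) in indexed_pairs
    )


best_r, first, second = strongest_pair()
print(f"searched {200 * 199 // 2} pairs of unrelated series")
print(f"strongest correlation: |r| = {best_r:.3f} (series {first} and {second})")
```

Because nearly twenty thousand pairs are compared and each series has only ten points, some pair will line up closely by chance, even though nothing connects them.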

[00:38:11] Jordan Harbinger: To be fair, on the other side of the coin, I can see that there's plenty of people that might hear about another Nicolas Cage movie and just decide to end it. I can see that correlation.

[00:38:20] Daniel J. Levitin: Yes. Or, you know, Nick sees all these people drowning in pools and thinks, "I'm going to just back off making movies for a while."

[00:38:27] Jordan Harbinger: Yeah, exactly. Yeah. Nicolas, do us a favor, man. People are drowning themselves all over America. Give it a rest.

[00:38:34] The reason I brought that back up, despite having already covered causation and correlation is because I like to give practicals here for the show. And frankly, I think that looking at ridiculous examples of so-called rules to see if they still hold up works quite well. Another thing that I use all the time, wherever possible, especially when debating is to argue against your own point. And this comes from a book called The Five Elements of Effective Thinking. I'm not sure if you've seen it. Arguing against your own point in your own head or with someone else or during a discussion is a great way to find the holes in your argument and to see whether or not you're right. And to see whether or not there is another perspective that could be equally valid because generally when we're arguing something we've already made up our mind. But when we argue against our points, often enough, we can find possibly that we're wrong or at least find another angle on these things. And it looks like this holds true with your work, as well as in Weaponized Lies. You did mention that even sometimes statistics, as presented, can't be interpreted at all. They're just there.

[00:39:33] Daniel J. Levitin: Yeah. I think what you're talking about is very important. And of course, some members of society get this training in looking at the other side — lawyers, notably, scientists — but we don't all get the training and we would all benefit from it. It doesn't do you any good to try to talk yourself into something if you're only looking at half of the story or half of the evidence. That is if you want to make evidence-based decisions. And if I could put in a plug for evidence-based decisions, they are correlated with — we don't know that they cause it, but they're correlated with a host of better life outcomes.

[00:40:07] People who make evidence-based decisions tend to make better decisions about their financial future, about their medical care. And so they tend to live longer and live happier lives. The difficulty here is that we tend to make decisions from an emotional place. And I'm the last person to deny the importance of emotions. I think they're very important, but we have to keep them at bay long enough to evaluate the evidence rationally and objectively and see where it goes. So yes, argue with yourself. What evidence would you need to contradict yourself? And is that evidence as solid? Is it as credible? Is it as powerful?

[00:40:42] Jordan Harbinger: Because it's not just people who, say, have low IQ, with the Dunning-Kruger sort of effect coming into play here, that are more easily manipulated. It's also people who can't control their emotions. And one of the reasons to control your emotions is not just to avoid embarrassing yourself, but to avoid convincing yourself that something is right or wrong because of the way that you feel about it before you've actually been able to evaluate the facts, before you've had a chance to ask yourself: Can we really know that? How can we know that? Is the person telling me this somebody who might know that? Or are they a pseudo-expert or are they an expert in something that is not this particular area? And these are all questions we need to ask ourselves. And that becomes very difficult if we're too busy being angry or worked up about whatever we're discussing at the time.

[00:41:30] Daniel J. Levitin: Yeah. One of the funniest illustrations of the Dunning-Kruger effect is the Jonah Ryan character in Veep, who is overconfident and doesn't know all kinds of things that he should know. So he doesn't know what regulations are. He just has these gut ideas about things. Like you were saying, building a wall doesn't solve the immigration problem because people are crossing over in other ways. The solution here again comes back to humility. Just because you think you can figure something out in your head doesn't mean you're thinking of all the angles. And so a lot of what lawyers and business people and scientists do is sit around a table and brainstorm and try to generate alternative scenarios. "What might I be missing? Who could I call that's an expert who could tell me what I might be missing?" Because just generating stuff out of your own head can lead to a very biased one-sided view.

[00:42:25] Jordan Harbinger: So how do people use these types of concepts and these types of informational techniques for positive intent and negative intent? Because both are manipulative, right? But usually, we focus on negative intent. It seems like there have to be examples of this being used for good. Can you think of any?

[00:42:41] Daniel J. Levitin: Yeah, the government and businesses may deceive us for our own good, in some cases. One example that comes to mind is that if you've got a fire in a building with restricted exits and a lot of people in the building, you may tell people to leave, but not tell them how bad the fire is, because you don't want to cause a panic. You might be misleading them, right, about the danger, because it's in everybody's best interest for them to leave in an orderly fashion.

[00:43:09] I think for national security reasons, our government and military don't always reveal to us everything that's going on. The police don't always tell you when they're about to close in on a suspect. They don't announce on the radio, "Well, we're a block away from the house where we think the suspect is," because that would give the suspect notice to leave. And even if you were to interview a policeman approaching the house and say, "Where are you going? What are you going to do?" the policeman might lie because public safety is improved by being able to catch this person. But I'm sure there are other examples where we're being lied to and someone thinks it's for our own good but it really isn't. It's just their conception of what our own good is.

[00:43:47] Jordan Harbinger: Sure. So are you of the opinion that manipulation, no matter what, is bad, even if it's for your own health?

[00:43:53] Daniel J. Levitin: Well, no, I'm not. I don't know how to sort this out, other than that it's something that we should be aware of and talk about. In medical schools, they teach classes in medical ethics, and this creates a poignant example. If you know that a person has only a 10 percent chance to live, but that the particular disease they have is affected by mood and emotion and brain chemistry, as many diseases are, is it ethical to tell them, "You're probably going to die," which could actually cause them to die because you put them into a depression? Or is it better to try and give them hope and kind of fudge the statistics because they really have a much better chance of pulling through if they've got that hope?

[00:44:37] These are ethical issues and there are no easy answers. You know, what if somebody says to their doctor, "Whatever happens, don't tell me if I'm going to die. I don't want to know. No matter what I say to you don't tell me. And here's a signed affidavit." And then a week later, they're on their death bed and they say to the doctor, "I forget what I said in that letter. I really do want to know." You could imagine cases where it's not so clear-cut. This is a very real case playing out in hospice care and old people's homes and hospitals. This comes up a lot of the time.

[00:45:07] Jordan Harbinger: Oh, I didn't realize that. I guess it does make sense. There's just something I never think about.

[00:45:11] Daniel J. Levitin: Certainly, the water supply might be contaminated in a way that doesn't really have any practical health implications. And if you look at the water codes for many major American cities, they're not required to disclose certain violations if they don't have practical implications. So you might figure, "Well, no news is good news. If I don't hear otherwise, my water is fine," but in fact, you know, they're allowed a certain number of contaminants and certain background levels of bad things, and they're not required to reveal them to you maybe because it would set off panic.

[00:45:41] Jordan Harbinger: That's pretty scary. That's really, actually not good at all especially coming from Michigan, where we had the Flint issue that was actually quite disgusting and covered up and was harmful.

[00:45:51] Daniel J. Levitin: Yeah. And speaking of disgusting, take a look at what the FDA regulations are for how many insect parts and how much rat feces are allowed in strawberry jam.

[00:46:01] Jordan Harbinger: I'm really disturbed by the fact that they actually have regulations for that specifically because that alone illustrates the problem enough for me. Ugh, wow. I mean, insect parts, whatever, that doesn't get to me, but the rest of it, yeah, I could take it or leave it. So many people out there in the media and corporations are indeed trying to trick us in one way or another. And I'm a firm believer that the way to counteract this isn't to simply trick people in the other direction. Instead, I'm thankful for the opportunity here today to begin the process of starting to teach people how to read data so that they can educate themselves properly and make their own conclusions based on accurate facts and data. Accurate interpretation of raw data really is the enemy of propaganda and deception in many ways. Would you agree with that?

[00:46:47] Daniel J. Levitin: Absolutely. I think each of us has to take responsibility for doing a little bit of thinking on our own. It's just because the people who are trying to deceive us have become so facile in what they do that the news media and the traditional gatekeepers of information can't keep up with all the lies and distortions. So it takes a little bit of work on our part, but it's worth it.

[00:47:10] Jordan Harbinger: Thank you so much. There's a lot of good practicals in here. The book, of course, Weaponized Lies has many, many, many more. Look at the data. Look at what people are giving you. More importantly, look at what they're not giving you and ask yourself questions about what you're being presented. Those little tiny tips alone will start to open up a whole hidden world that is frankly, a little uncomfortable, but very, very useful. And for those of you who are getting used to this type of critical thinking, I think you'll start to view things completely differently. Thank you so much, Daniel.

[00:47:39] Daniel J. Levitin: Thank you, Jordan.

[00:47:42] Jordan Harbinger: You're about to hear a preview of The Jordan Harbinger Show about how you can be affected by ransomware and cyber attacks on the rise now, all over the world.

[00:47:50] Nicole Perlroth: We still don't know just how the Russians are into our government systems. It's not as if we would see a Russian hacker inside the State Department's network and they would scurry away. They would stay and fight to keep their access. And when I went and interviewed the guys who were brought onsite to remediate and get the Russians out of those systems, they said, "We'd never seen anything like it. It was like hand-to-hand digital combat." So it's going to be at least a year or more before we can stand up and confidently say we've eradicated Russian hackers from nuclear labs, the Department of Homeland Security, the Treasury, the Justice Department.

[00:48:31] And now, you're seeing ransomware attacks that are taking out pipelines and the food supply that just come down to a lack of two-factor authentication and bad password management. That's all it takes. How do you trust that any of the software you're using is secure and not a Russian Trojan horse? How do you respond to an attack aggressively when you yourself are so vulnerable?

[00:48:58] We live in the glassiest of glass houses that makes escalation, you know, that much more of a risk. So yeah, we might have sharper stones than others, but our adversaries can just come back and say, "Hey, they just blew up this pipeline," or, "Hey, they just turned off our lights." We're just going to go hit them. And then you get into this cycle of escalation. And that's what I worry about is the cycle of escalation. And I think we're getting close enough that I think we're going to see a cyber attack within the next four years even that causes substantial loss of life.

[00:49:30] Jordan Harbinger: For more with Nicole Perlroth on what the US should do to push back against cyber warfare, check out episode 542 on The Jordan Harbinger Show.

[00:49:41] Thank you once again to Daniel Levitin. The book will be linked in the show notes as all the materials from all the guests always are. Jordanharbinger.com is where you can find those as well as the course. Please use our website links if you buy the books from the guests. It does help support the show. Transcripts are in the show notes. Advertisers, deals, and discount codes, all at jordanharbinger.com/deals. Again, please consider supporting those who make this show possible. I'm at @JordanHarbinger on Twitter and Instagram, or just connect with me right on LinkedIn. I love talking with all of you. I'm teaching you how to connect with great people and manage relationships using our Six-Minute Networking course and the tactics therein. I'm teaching how to dig that well before you get thirsty. That's at jordanharbinger.com/course. And as you will see, most of the guests on the show subscribe and/or contribute to that same course. Come join us, you'll be in smart company.

[00:50:32] The show is created in association with PodcastOne. My team is Jen Harbinger, Jase Sanderson, Robert Fogarty, Millie Ocampo, Ian Baird, Josh Ballard, and Gabriel Mizrahi. And finally, remember, we rise by lifting others. The fee for this show is that you share it with friends when you find something useful or interesting — you know a science geek, you wouldn't even be listening to this, share this episode with them, or if you know somebody who needs to make better decisions with the information they consume, this is a good one for them as well. The greatest compliment you can give us is to share the show with those you care about. In the meantime, do your best to apply what you hear on this show, so you can live what you listen, and we'll see you next time.
