How has artificial intelligence (AI) become the 21st century’s greatest dilemma? Microsoft AI CEO and The Coming Wave author Mustafa Suleyman weighs in!

What We Discuss with Mustafa Suleyman:

- A deep dive into the evolution of AI and understanding how AI learns and predicts.

- AI’s potential impact on everything from everyday jobs to gaming to national security to solving global challenges.

- The ethical considerations of allowing AI to replace humans in the workforce — particularly as it gets more sophisticated and more capable of taking on more complex tasks.

- How AI will shape the course of the arms race between the superpowers.

- The critical need for responsible innovation, international safety standards, and cooperative governance to harness AI’s benefits while mitigating its threats.

- And much more…

Like this show? Please leave us a review here — even one sentence helps! Consider including your Twitter handle so we can thank you personally!

On this episode, we’re joined by Mustafa Suleyman, Microsoft AI CEO, Inflection co-founder, and co-author of The Coming Wave: Technology, Power, and the 21st Century’s Greatest Dilemma. Here, we discuss how we got to where we are today in the field of AI, what’s already on the table, and what’s in the cards for our AI-enhanced future — the good, the bad, and the ugly — and what we can do to avoid being drowned by this coming wave. Listen, learn, and enjoy!

Please Scroll Down for Featured Resources and Transcript!

Please note that some links on this page (books, movies, music, etc.) lead to affiliate programs for which The Jordan Harbinger Show receives compensation. It’s just one of the ways we keep the lights on around here. We appreciate your support!

Sign up for Six-Minute Networking — our free networking and relationship development mini-course — at jordanharbinger.com/course!

Subscribe to our once-a-week Wee Bit Wiser newsletter today and start filling your Wednesdays with wisdom!

This Episode Is Sponsored By:

- Nissan: Find out more at nissanusa.com or your local Nissan dealer

- AG1: Visit drinkag1.com/jordan for a free one-year supply of vitamin D and five free travel packs with your first purchase

- Shopify: Go to shopify.com/jordan for a free trial and enjoy three months of Shopify for $1/month on select plans

- BetterHelp: Get 10% off your first month at betterhelp.com/jordan

- The Dr. Drew Podcast: Listen here or wherever you find fine podcasts!

Miss our conversation with transformative technology specialist Nina Schick about the effect deepfakes will have in a world where facts don’t matter as much as they once did? Listen to episode 486: Nina Schick | Deepfakes and the Coming Infocalypse here!

Thanks, Mustafa Suleyman!

If you enjoyed this session with Mustafa Suleyman, let him know by clicking on the link below and sending him a quick shout out on Twitter:

Click here to thank Mustafa Suleyman on Twitter!

Click here to let Jordan know about your number one takeaway from this episode!

And if you want us to answer your questions on one of our upcoming weekly Feedback Friday episodes, drop us a line at friday@jordanharbinger.com.

Resources from This Episode:

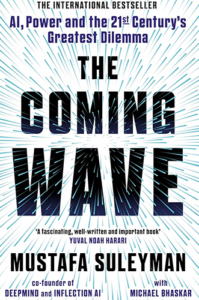

- The Coming Wave: Technology, Power, and the 21st Century’s Greatest Dilemma by Mustafa Suleyman and Michael Bhaskar | Amazon

- Mustafa Suleyman | Website

- Mustafa Suleyman | Twitter

- Mustafa Suleyman | LinkedIn

- Microsoft Taps DeepMind Co-Founder Suleyman to Spearhead Consumer AI Push | Reuters

- Who is Mustafa Suleyman? Meet Microsoft’s New AI Expert | The National

- Swathe Definition and Meaning | Merriam-Webster

- How TikTok Reads Your Mind | The New York Times

- Socrates on the Forgetfulness That Comes with Writing | New Learning Online

- Are Google and Smartphones Degrading Our Memories? | Harvard Gazette

- Kevin Kelly: AI Electrified | Dubai Future Talks

- What is AI? | Professor John McCarthy, Stanford

- The Terminator | Prime Video

- Nina Schick | Deepfakes and the Coming Infocalypse | Jordan Harbinger

- Marc Andreessen | Exploring the Power, Peril, and Potential of AI | Jordan Harbinger

- AI Will Create ‘A Serious Number of Losers,’ Warns DeepMind Co-Founder | Financial Times

- The First Emotionally Intelligent AI | Pi

- Generative AI and the Future of Content Creation | Forbes

- What is Moore’s Law? | HubSpot Marketing

- ‘I Hope I’m Wrong’: The Co-Founder of DeepMind on How AI Threatens to Reshape Life as We Know It | The Guardian

- Building Blocks for AI Governance by Bremmer and Suleyman | IMF

- Mustafa Suleyman: Containment for AI | Foreign Affairs

- Massively Parallel Methods for Deep Reinforcement Learning | Papers With Code

- DeepMind: Inside Google’s Groundbreaking Artificial Intelligence Startup | Wired

- Intelligence Explosion FAQ | Machine Intelligence Research Institute

- 4.7 Million Reasons Why Nvidia’s Artificial Intelligence (AI) Chip Dominance Will Be Nearly Impossible to Topple | The Motley Fool

- Meet Sharon Zhou, the AI Founder Doing Just Fine Without Nvidia’s Chips | Business Insider

- Artificial General Intelligence or AGI: A Very Short History | Forbes

- A Race to Extinction: How Great Power Competition Is Making Artificial Intelligence Existentially Dangerous | Harvard International Review

- Chris Miller | Chip War: The Battle for Semiconductor Supremacy | Jordan Harbinger

- The Turing Test | Stanford Encyclopedia of Philosophy

- Google’s AI Passed the Turing Test — And Showed How It’s Broken | The Washington Post

- Mustafa Suleyman: My New Turing Test Would See if AI Can Make $1 Million | MIT Technology Review

972: Mustafa Suleyman | Navigating the 21st Century’s Greatest Dilemma

This transcript is yet untouched by human hands. Please proceed with caution as we sort through what the robots have given us. We appreciate your patience!

[00:00:00] Jordan Harbinger: This episode of The Jordan Harbinger Show is brought to you by Nissan. Nissan SUVs have the capabilities to take your adventure to the next level. Learn more at nissanusa.com.

[00:00:08] Coming up next on The Jordan Harbinger Show.

[00:00:11] Mustafa Suleyman: The way that we trust one another is that we observe what you say and what you do.

[00:00:17] And if what you say and what you do is consistent, then over time we build up trust. And that's the behaviorist model of psychology. And I think that, practically speaking, that's gonna be the standard to which we hold a lot of these AIs for the, you know, foreseeable future.

[00:00:37] Jordan Harbinger: Welcome to the show. I'm Jordan Harbinger. On The Jordan Harbinger Show, we decode the stories, secrets, and skills of the world's most fascinating people and turn their wisdom into practical advice that you can use to impact your own life and those around you. Our mission is to help you become a better-informed, more critical thinker through long-form conversations with a variety of amazing folks, from spies to CEOs, athletes, authors, thinkers, performers, even the occasional organized crime figure, mafia enforcer, former jihadi, astronaut, hacker, or special operator.

[00:01:07] And if you're new to the show or you wanna tell your friends about the show, and I always appreciate it when you do that, I suggest our episode starter packs. These are collections of our favorite episodes on persuasion and negotiation, psychology, geopolitics, disinformation, cyber warfare, AI, crime, cults, and more.

[00:01:22] That'll help new listeners get a taste of everything we do here on the show. Just visit jordanharbinger.com/start or search for us in your Spotify app to get started. My guest today is Mustafa Suleyman, on the forefront of AI, having been in the game so deep and for such a long time. Mustafa is the CEO of Microsoft AI and the co-founder and former head of Applied AI at DeepMind, which is an AI company that was acquired by Google, if you're sort of outta the loop on that.

[00:01:47] He's since co-founded Inflection AI, a machine learning and generative AI company, which I've used and is quite impressive as well. Mustafa is a deep thinker. He really knows AI's capabilities inside and out. Our conversation today takes us from AI and surveillance through AI in the workplace, to how and why China is currently ahead of the US in the race to create something called artificial general intelligence.

[00:02:09] That's the thing that's perhaps both most exciting and most terrifying with respect to AI. This is artificial general intelligence. We'll define it on the show. This is what everybody thinks of when they watch movies and they're like, that's AI, as opposed to what AI actually is right now. But we're getting there.

[00:02:22] And Mustafa's just a brilliant guy. This conversation actually flew by for me, so I know you're going to enjoy it as well. Even if you're kind of not in the loop on the AI stuff, or if you're neck deep in it, you'll dig this. Now here we go with Mustafa Suleyman.

[00:02:41] I have to say, your book really puts AI into, I hate saying things like this, like, this puts it into perspective, but it really does put AI into a unique perspective, because it's clearly not a book where someone who wants to be relevant is like, let me throw some buzzwords around. AI is really trending right now.

[00:02:57] Let's do something on that. You've really been immersed in this field and the repercussions of what this is gonna bring to us on a global level in a super deep way. So I'm excited to have this conversation. I think it's gonna be fun.

[00:03:07] Mustafa Suleyman: Thank you. I, I really appreciate that. I mean, it's been almost 15 years in the field now, thinking about the consequences of AI and trying to build it so that it delivers on the upsides and it's just a surreal time to be alive.

[00:03:19] I mean, it, it's just been such a privilege to be creating and making and building at a time like this. Mm-Hmm. You know, for 10 years in AI development, the curve was pretty flat. We were doing some cool things. There were some research demos. We played a bunch of games really well, but now we've really crossed this moment, this threshold, where now computers can increasingly talk our language, and that is just mind-blowing.

[00:03:46] I think people are still not fully absorbing how completely nuts that is and what it means when every single one of your devices that you have now, your tablets, your screens, your cars, your fridges, those are going to become conversational endpoints. Mm-hmm. They're going to talk to you about everything you are trying to get done, the things you believe, things that you like, what you're afraid of.

[00:04:09] They're gonna come alive in a sense. I don't like saying alive loosely, but they're really gonna start to feel much more animated in your life. And I just think that's gonna change what it means to be human. It's gonna change society in a very fundamental way. It'll change work and so on. So it's, it's just a crazy time to be alive.

[00:04:25] Jordan Harbinger: Thinking about what you have just said, there's gonna be this huge swathe of people that... swath? I never know how to pronounce that word now that I think about it. I've only read it. There's gonna be this huge,

[00:04:34] Mustafa Suleyman: Do you know, I have to say, I don't know. I was just about to say, I do not know. I say swathe. Swathe sounds better, but I think the American version is swath.

[00:04:42] Jordan Harbinger: Swath also sounds right. See, we're not gonna solve this right now. I'm not even gonna look it up. I'm just gonna say swath. There's gonna be this huge swath of people that actually never realize how amazing that whole thing is, and also how it works, right? That Venn diagram's never really gonna become a circle because my kids are gonna be like,

[00:04:58] Of course you can talk to the refrigerator about a problem you're having at school or, or whatever. And I'm gonna go, wow, I can't believe I'm talking to my refrigerator. Like I'm sitting there, it tells me that broccoli's about to expire. And I'm like, am I wasting my time doing this? And it gives me like a reason to pin and that's gonna be amazing, but I'm not necessarily going to understand exactly how that's happening.

[00:05:19] Whereas my son might be like, obviously it's just got an LLM built into it and it connects with Amazon's cloud servers or whatever, and you're just like, okay, fine. But to him it's not that amazing because he, he'll have grown up essentially with this being like electricity. Like, I wasn't amazed when I saw my parents turn a light on in the bedroom.

[00:05:34] It was just a thing that was ubiquitous by that time.

[00:05:38] Mustafa Suleyman: Totally. I mean, all of this is becoming second nature so quickly, it's sort of mind-blowing. I mean, I, I have to say, I even periodically find myself picking up a regular magazine and then wanting to like pinch and zoom on some of the text. Yeah. You know, or just like swipe over.

[00:05:53] I've done that a few times. I think it's odd, you know, we don't fully appreciate how quickly things are happening, but also how quickly we are changing, right? On the one hand, it feels super scary and amorphous and hard to define and we dunno what the consequences are. And the next thing, you look round and everybody has a phone, with a camera, with a listening device that enables you to video with somebody on the other side of the world that can stream content left, right, and center.

[00:06:17] I mean, if I described to you 30 years ago, a world in which pretty much every one of us in the developed world are gonna have a laptop. Mm-Hmm. A desktop, probably a tablet. Certainly a phone that our TV would have a camera on the end of it. And all of those are gonna be listening devices and video devices.

[00:06:35] You would think that I was a crazy dystopian, you know, scary sci-fi addict. Mm-Hmm. But actually that has happened seamlessly, naturally, and actually without huge consequence like, you know, yes. There are downsides and for sure, you know, we have to be conscious of those, learn the lessons of those and really talk openly about them and not belittle them.

[00:06:55] At the same time, the world is clearly smarter, more engaged, more connected, way more productive. We all have much more access to information that's hugely democratizing. Mm-hmm, and hugely liberating. It happens more seamlessly and in a kind of more profound way than we're ever able to imagine ahead of time.

[00:07:13] Jordan Harbinger: You're right, you're onto something here. Of course. I mean, having thought quite a bit about this yourself in preparation for the book, the mobile phone, and man, the laptop thing you mentioned at first, that happened so fast. I remember, 'cause I was in law school and it was like one year where I graduated from college.

[00:07:27] Nobody had a laptop in class. Maybe one person in a class of 200 or 300 people would have a laptop. And you're like, that guy is kind of weird. But I guess he's really organized or he's really into computers or something. And he'd be typing and his battery would run out 45 minutes into class, 'cause that's how long batteries lasted on laptops at that time or whatever.

[00:07:44] And then I went to teach English abroad in former Yugoslavia for a year. And I came back, and in law school the first year, and bear in mind this is like a year and change later, 80% of people had laptops. And there were some older people who were like, I'm not gonna type things out. That's ridiculous. And then the next year, all of those people had given in and they're like, it's just easier, man.

[00:08:04] I can search for things. I don't have to look through this note. But there was maybe like one person handwriting notes, and that person was like, I can't focus when I have the laptop and I'm not paying attention in class. And I was like, oh, that's actually really smart. Yeah, I should probably do that too.

[00:08:17] Didn't take that advice. Should have done it. Would've learned more about the law, but it changed almost overnight in terms of the number of years. And with the iPhone as well, like, all my BlackBerry friends at the law firm were like, oh, I'm never getting one of those. I already have this BlackBerry. It has Brick Breaker on it.

[00:08:32] It has a keyboard. I'm not gonna use a touch screen. I got a keyboard. I need a keyboard. And one or two years later they were like, have you seen this? This has apps on it. It's unbelievable. And I'm like, yeah, I told you. And nobody went back. Right. Nobody, even my dad is addicted to his damn phone.

[00:08:46] Mustafa Suleyman: Well, and people also say, you know, of course it's gonna make you dumber.

[00:08:49] Right. It's gonna make you lazy. Yeah. You know, you'll forget how to write. And I mean, I probably have forgotten how to handwrite, to be quite honest with you. Yeah. You might got that one. That's pretty awful. Yeah. Although, I mean, doctors write all day and their handwriting's the worst. Yeah. Isn't it true?

[00:09:01] So I dunno what that's about, but they said that about calculators, they said that about, you know, phones, it makes us more lazy. It makes us less connected. You know? I think that's partly true, sort of, but it's also a connection in a new kind of way. Mm-Hmm. I'm a huge fan of TikTok. I actually love it, you know?

[00:09:17] Yes. Do I get addicted to it periodically? Absolutely. Do I need to take a break from it? It's a kind of strange relationship, but at the same time, it gives me access to an unbelievable amount of content that is so obscure and strange and detailed and subtle, and it's just mind blowing to see people who never would've thought of themselves as quote unquote creators, right?

[00:09:39] They didn't go to drama school, you know, they're not art directors, they haven't been studying film all their lives. They've just suddenly been given this tool. And whether it's like harmonizing with the air conditioning unit, or filming a beautiful frog, or doing a silly dance, or whatever it is they do, like, there's just this massive range of creativity and output.

[00:10:01] I think that, you know, it's sort of important to not downgrade or diminish how beautiful it is to see billions of people have access to knowledge and tools to be creative and productive, because it is incredible. So far, it hasn't made us dumber, it hasn't made us slower, it hasn't made us more disconnected.

[00:10:21] You know, we should be alert to those risks, no question. Mm-Hmm. But I think we're trending in a pretty good direction.

[00:10:26] Jordan Harbinger: Yeah. Was it Aristotle or Socrates, I always get these guys confused, who said books are gonna be bad because nobody's gonna memorize information anymore?

[00:10:37] And that was the basis for being a learned person back then. And I think it was Socrates, and it was like, don't write anything down. That's the end of civilization as we know it, because you're supposed to have this stuff in your brain where it mixes with other ideas. And he has kind of a point there.

[00:10:50] But it's like, that doesn't mean you can't have it in a book too. So yeah, the technophobic attitude is always gonna be there. It also sounds like, and look, Kevin Kelly has said this. He said AI is gonna change the world more than electricity did. Do you think that's accurate?

[00:11:05] Mustafa Suleyman: Without question. Mm-Hmm. AI, it's even hard to describe AI as a technology.

[00:11:12] We are a technological species. Mm-Hmm. From the beginning of time we have been trying to create shelter, use stone tools, do needlework to create fabrics. We have been manipulating the environment to reduce our suffering, and that is the purpose of a tool. But a tool has always been inanimate, right? It can only ever do precisely what you instruct it to do.

[00:11:35] I mean, you may instruct it with your hands, you know, less language, but it's been an engineering output of our activity. Whereas now, I think the profound shift that we are going through is that we're sort of giving rise to these, you know, this new phenomena that I hesitate to call the tool because it has these amazing properties to be able to create and produce and invent way beyond and disconnected to what we've actually directed it to do.

[00:12:02] When you say, write me a poem, and it produces a poem, are you really the tool user in that setting? Mm-Hmm. You've maybe framed the poem with a, a zebra and, you know, a French classical style and its relationship to a check shirt. You've asked it to connect three random concepts, but really the power is in the production of this output.

[00:12:21] Yeah. And in time, you know, these are gonna get more autonomous, they're gonna have more and more agency, we're gonna give them more freedom to operate. And you know, people will even design them to have their own goals and their own drives. The kind of fundamental quality of this new phenomena, or this design material feels to me quite different to the engineering of steam or electricity or the printing press.

[00:12:47] Jordan Harbinger: Okay, that makes a lot of sense. But one thing kind of tripped me up here: you said you could program it to have its own goal. That's where it gets a little bit scary, right? Because a goal in a human, it evolves, or in an intelligent being, I should say, it evolves. 'Cause I'm sure maybe a goal for a dog evolves too, as it satiates hunger or whatever.

[00:13:04] I don't know. We're getting philosophical here, but that could be kind of bad news, right? And that's the plot of every sort of dystopian AI sci-fi movie from Terminator to, I don't know, whatever. The AI is supposed to protect peace on earth and it's like, oh, the problem is humans. They're the ones causing all the wars.

[00:13:20] Lemme just get rid of those folks. And it's like, I get that. It's great that goals can evolve, but controlling this tech, and we will get to this in a bit, your ideas for how to control it or contain it, it just seems impossible, 'cause we're essentially designing it maybe not to be able to be limited in that way.

[00:13:35] Mustafa Suleyman: Yeah, and I think the crucial words there are "we are designing it." Like, who is that "we"? You know, "we" kind of implies that you could like point at a specific lab or a government department or a specific company. And obviously all of those actors are involved in making AI and experimenting with this new tool and technology.

[00:13:57] But the truth is that there's this massive morass of, well, millions now, of developers who all have their own motivations and incentives, who are all experimenting in different ways. Most of this is open-source software. It's all happening, you know, in many, many different locations. Mm-Hmm. And so there isn't really a coordinated, centralized "we."

[00:14:18] And I think that's the first big thing that we have to wrap our heads around if we're gonna think about how we contain it, is that actually this is a very distributed set of incentives, driving forward creation. I think the thing that I am most concerned about, touching on what you've said, is that it is gonna be possible to give these things goals.

[00:14:38] It is gonna be possible to give these things more autonomy. It is gonna be possible to design them so that they self-improve. Those three capabilities will be pretty dangerous. You know, for sure, it is going to be increasing the level of risk, because a system can wander off and come up with its own plans instead of following your plans.

[00:14:58] Right. What we have to start to think about is how we coordinate as a species over the next 20, 30, 40 years, because these capabilities will arise. There's no putting the capabilities back in the box. We have to decide what we don't think is acceptable, where the risk level is too much, and what has to be off limits.

[00:15:16] Just by the way, as we do with many, many other technologies, I mean, you know, you, you can't just like get a plane and fly it around downtown Seattle. You can't fly a drone around. You can't drive a car in a way that violates the highway code. You can't drive a tank down the street, even though you can buy one privately.

[00:15:34] There are rules everywhere about everything. So we've done this before and we can do it again. It's just that each time we create these new rules, it's significantly different in important ways, and that's what feels scary and unprecedented about it, different to what's come before. And of course, this is very different.

[00:15:51] This has these kind of semi-lifelike or digital-person-like characteristics, and that does feel pretty sci-fi. It's gonna be a very strange time.

[00:16:00] Jordan Harbinger: We've done some episodes on AI in the past, and people are worried about a surveillance state that might come as a result of it, or backlash in some other way.

[00:16:08] Misinformation running rampant, deepfakes, and things like that, which I'm sure we'll touch on later in the episode. But one that comes up all the time that I think is more relatable or likely is this mass unemployment idea. And that seems more likely than, I don't know, total annihilation by Skynet.

[00:16:28] In the past, Marc Andreessen said this on the show. He said, hey, technology gets rid of jobs, but then it creates other jobs, and there might be a lag. But it's happening so fast with AI that I'm not really sure if jobs will be created at the same rate or a similar rate as AI makes them obsolete, because AI is developing so fast.

[00:16:49] So, I don't know. I'm curious what you think. It just seems like AI is developing on a curve that is, is so fast, especially as AI learns to develop itself, that a lawyer isn't just gonna be like, oh, well now that I don't have to do legal research anymore, I'm just gonna do this totally different thing. And then that gets taken up a year later or six months later, the guy's just gonna retire.

[00:17:07] Mustafa Suleyman: Yeah, I completely agree. I mean, I, I think that the thing that sort of people don't pay enough attention to is that just because it's happened in the past doesn't mean it's gonna happen in the future. Like, that's such a simple line of reasoning. I mean, people often say, like I guess Marc Andreessen, that we've always created new jobs.

[00:17:26] Well, in order for you to believe that, you have to make the argument today that the very thing that is disrupting existing jobs is not going to do the new work that is supposedly created as well. Mm-Hmm. If you are a knowledge worker, or a lawyer, or, you know, you work as a project manager, or you just do a regular job using a computer for most of your day and use Zoom and send emails, these AIs are gonna be able to do those tasks very, very cheaply,

[00:17:56] quite accurately, and, you know, 24/7. And then you have to ask yourself, okay, so what incentive do companies have to keep people in work versus use this cheaper thing to kind of replace them? And it's pretty obvious that, like, the shareholder incentive is gonna say, well, we might be able to make a lot more money if we could cut out this labor.

[00:18:18] Then you have to say, okay, well, what is this new type of work that is gonna come, which AIs won't be able to do? And how do we fund it? That's not a stupid point. Like, I mean, that's pretty reasonable. Like, maybe we could start to properly fund healthcare workers. Mm-Hmm. Maybe we could properly fund and pay for education, right?

[00:18:36] Maybe we could properly fund elderly care and home help or community work, physical things in the real world that, you know, aren't gonna be naturally what AI can do in the next few decades. Because AI is mostly gonna target white-collar work. And that's again, I think, surprising to people, because the narrative from sci-fi and from the last few years has been, well, you know, the robots are coming for the manufacturing jobs.

[00:19:01] Right? Absolutely not. Right? It's just, you know, robots are a long way behind. What's actually gonna happen is knowledge workers that work in a big bureaucracy who, you know, spend most of their time doing payroll or administration, or supply chain management or accounting or paralegal work, these kinds of things.

[00:19:20] You know, I think we're already seeing it in the last 12 months or so. Those workers are gonna be the first to be displaced. And that just leaves a question for society, which is just like, what do we do with that? That's great value. The question is, who captures that value and how is it redistributed?

[00:19:35] Jordan Harbinger: It's fascinating.

[00:19:36] One of the biggest plot twists, uh, I think of my life in terms of tech is seeing now that robots are coming much later than robotic brains or artificial brains. Like I, I think we were kind of all raised to be like, oh man. Eventually a robot's gonna do this, a robot's gonna do that. Nope. We still need the guy who unloads the truck.

[00:19:55] We just don't need the CEO of the company or whatever anymore. Like that guy, the legal department is now useless. The accounting department is now useless. Pretty much everybody in that skyscraper the company bought is mostly redundant because now we have a box somewhere in the cloud or you know, an Amazon data center that does all that.

[00:20:11] We still need the entire network of people that are driving and bringing the package to your door. Like, those people are fine. It's just a really big, kind of upside-down apple cart.

[00:20:21] Mustafa Suleyman: Yeah, it's totally the opposite of what sci-fi predicted, which, you know, is a good reason to not take anything for granted and not just assume that we're gonna create new jobs or that the narratives of the past are actually what's gonna happen in the future.

[00:20:34] It's unprecedented. And so you have to evaluate the technology trends in their own right, for their own reasons. Right. And I think when you actually look at the substance of it, AIs use the same tools that we use to do our work, right? They use browsers. They'll be able to navigate using a mouse and a keyboard, effectively, in the backend, using APIs.

[00:20:54] And they can process the images, right? So they can just read the screen of what is on, you know, your desktop or inside of your webpage and they can now write emails and send emails and negotiate contracts and design blueprints and produce entire, you know, spreadsheets and slide decks and write the contracts.

[00:21:13] Those skills combined are what most of us do day to day for our regular jobs in kind of white-collar work. And so that's what we're gonna have to confront over the next decade or two.

[00:21:24] Jordan Harbinger: It's quite fascinating how quickly this is all happening, and unfortunately the head-in-the-sand approach seems to be kind of the policy among people.

[00:21:32] And in the book you say something along the lines of humans are reacting like, ah, waves are everywhere in human life. This is just the, the latest wave. You know, we had the wave of this, we had the wave of that. The internet came, everyone said Y2K, nothing happened. We're still computerized. The internet's great.

[00:21:47] Why is this wave, the AI wave, why is this different? You know, why isn't this the exact same sort of worry slash fearmongering slash fear of the unknown that everything else has been in the past?

[00:22:00] Mustafa Suleyman: Yeah, that's a great question, and I think that the first thing to say is that the results are self-evident.

[00:22:07] In this case, you can actually now talk to a computer. There's no programming required. You know, you can actually get it to produce novel images. The kind of funny thing is that people said, well, AIs are never gonna be creative, right? AI will be able to do rule-based math. Do you remember that?

[00:22:27] That was only a couple years ago that people said AI will never be, yeah. I mean, times change so fast, right? And now you look at a piece of music that an AI's produced, or you look at one of these image generators and it's like stunningly creative, and now obviously producing real time video as well. So it's pretty clear that AI are quote unquote creative.

[00:22:47] Then people always used to say, well, AIs will never be able to do empathy and compassion and kindness and human-like conversation. You know, that's always gonna be the preserve of human-to-human touch. Well, actually, it's self-evident. The results speak for themselves. Like if you look at our AI, Pi, for example, that we make at Inflection, it's unbelievably fluent and smooth and friendly and conversational.

[00:23:10] I mean, it's like chatting to a human, and many people find it better than speaking to a human. It doesn't judge you. It's always available. Yeah. You know, it's kind and supportive. I think that's the first reason: you can actually see the power of these models in practice. And then the second thing is that the rate of improvement is kind of incredible.

[00:23:30] And what's driving this rate of improvement is training these large-scale models, and what we've seen over the last 10 orders of magnitude of computation (so 10 times, 10 times, 10 times, 10 times in a row of adding more computers to train these large models) is that with each order of magnitude, you get better results, right?

[00:23:50] The image quality is better, the speech recognition is better, the language translation is better, the transcription is better, the language generation is better. You can clearly see that this curve has been very predictable, and over the next sort of five to 10 years, you know, many labs are gonna add orders of magnitude, 10x, 10x, 10x per year.

[00:24:11] And so I think it's quite reasonable to predict that there's gonna be a new set of capabilities beyond just understanding images and video and text. AIs are gonna be able to take actions, they're gonna be able to use APIs, they're gonna be able to predict and plan over extended time sequences. And so I think that's why we are all predicting that this time is different.

[00:24:33] Jordan Harbinger: This is the Jordan Harbinger Show with our guest, Mustafa Suleyman. We'll be right back. This episode is sponsored in part by AG1. Taking care of your health is not always easy, but at least it should be simple. That's why for the last decade or so, I've been drinking AG1 pretty much every day. It's just one scoop mixed in water once a day.

[00:24:47] Every day, it's part of my morning ritual that keeps my brain firing on all cylinders, crucial when my podcast guests are usually smarter than me by a fair margin. I mean, staying on my toes is a must, especially when I'm up against some of the greatest minds around, such as our guest today, Mustafa Suleyman.

[00:25:02] That's because each serving of AG1 delivers my dose of vitamins, minerals, pre- and probiotics, and more. And there's AI in there. No, I'm kidding. It's a powerful, healthy habit that's also powerfully simple. And more importantly, it's just really easy to get it done. Every batch of AG1 undergoes stringent testing and is NSF certified.

[00:25:17] I know a lot of people are like, you don't know what's in there. NSF certified, those are the things that I rock with on the show. Otherwise, God, it's just Chinese sawdust for all we know. NSF certified means no harmful contaminants, no banned substances.

[00:25:28] If there's one product we had to recommend to elevate your health, it's AG1, and that's why we've partnered with them for so long.

[00:25:34] So if you wanna take ownership of your health, start with AG1. Try AG1 and get a free one-year supply of vitamin D3+K2 and five free AG1 Travel Packs with your first purchase at drinkag1.com/jordan. That's drinkag1.com/jordan, and check it out.

[00:25:49] This episode is also sponsored by Shopify.

[00:25:52] Imagine you and Shopify are the modern-day Armstrong and Aldrin blasting off into the business cosmos together. It's not just about exploring; it's about setting the pace for what's next for your venture. Think of Shopify as more than a marketplace. It's your business BFF, right there with you from the thrill of your first sale and the buzz of opening your shop to the milestone of shipping out your millionth order.

[00:26:06] Got something awesome you're itching to sell? Whether it's the coolest school gear or eco-friendly playthings, Shopify is your go-to, making it a breeze to sell anywhere and everywhere. And guess what?

[00:26:18] Shopify's checkout outperforms others by 36%. Shopify Magic? It's an AI genie that helps you whip up engaging content in no time, from blog posts that pop to product descriptions that dazzle, answering FAQs like a boss, even pinpointing the prime time to hit send on your email blast. The best part is it's all free for Shopify sellers.

[00:26:35] With Shopify's single dashboard, manage orders, shipping, and payments from anywhere. Sign up for a $1-per-month trial period at shopify.com/jordan, in all lowercase.

[00:26:39] Go to shopify.com/jordan now to grow your business no matter what stage you're in. That's shopify.com/jordan.

[00:26:51] If you're wondering how I managed to book all these great authors, thinkers, creators, and just straight-up geniuses every single week, it is because of my network.

[00:26:58] That is the circle of people that I know, like, and trust and, perhaps more importantly, who know, like, and trust me. And now I'm teaching you how to build the same thing for yourself in our Six-Minute Networking course over at sixminutenetworking.com. I know that networking is something most of you don't like to do. I get it.

[00:27:14] You don't wanna be a used car salesman. This course is non-cringey. It's down-to-earth. It's going to make you a better colleague and a better friend, not an annoying guy or gal in the office or in your career or in your space. It just takes a few minutes a day, and many of the guests on the show subscribe and contribute to the course.

[00:27:30] So come on and join us. You'll be in smart company where you belong. You can find the course at sixminutenetworking.com. Now back to Mustafa Suleyman. It really is amazing to think that, yes, you're right, the creativity thing blew me away, looking at some of these image generators. I couldn't believe it when somebody posted something.

[00:27:50] This is literally maybe a year or two ago at most: look at this AI-created image. And I thought, well, okay, but how does it create the image? Surely it just had an image and then changed some of the things in the image and then redrew it. And it's like, no, someone asked it to draw, I dunno, Jordan Harbinger in front of a communist flag standing on a mountain, and it's like, there it is, in a few seconds.

[00:28:14] That was really mind-blowing, this kind of thing, because if we can do that with still images, and you mentioned now real-time video, that just eliminates a ton of work. But also, eventually you're not gonna have to ask it to do anything. It's just gonna start creating things. I mean, you could easily, I'm sure, already craft an AI that would just start making things according to your own preferences and then continue to do that.

[00:28:32] Marc Andreessen also gave the example of, instead of watching something on Netflix and hoping they get it right, you just tell Netflix what you like, or it already knows, 'cause you've already watched 10,000 things on Netflix over the past, you know, 30 years.

[00:28:46] By that point it just says, we've made a show for you. It's kinda like Game of Thrones, except it's got that futuristic dystopian stuff. All the dragons are robots, and it takes place in space because you like Star Wars, and you're just like, I'll watch that, right? And then after the first episode it's like, hey, your eyes were more engaged when the dragons were fighting.

[00:29:03] So the next episode's gonna have way more of that kind of conflict. Oh, you don't like the space stuff and zero gravity? All right, fine, we're gonna bring it back down to Earth in the next episode. It's just gonna be able to do that kind of thing. And people will, of course, say, well, how is it gonna know what you really like?

[00:29:18] To your point, when people have said, hey, they're not humans, they can't read emotions, I think now computers are better at reading emotions than humans are in tests. Like, a robotic doctor could actually have a better bedside manner than a human doctor who's actually really good at their job.

[00:29:35] Mustafa Suleyman: Yeah, you're totally right. And that kind of personalized content generation is definitely coming. I mean, it's actually what we are trying to do with text and image and articles with Pi, right? So Pi now generates a news briefing for you every morning that's personalized to you: five stories in spoken text, with a nice image to go with it, summarizing what's happened in the news.

[00:29:57] And then you can actually talk about the news with Pi, and based on how you react to the different stories (you know, you may say, oh, I'm really not interested in that kind of sport, or, mm-hmm, I'm sick of hearing about this war that's going on, or, I'm really into bicycles), the next day Pi's gonna produce something that is closer to what you like or what you're interested in.

[00:30:19] And that is, in a way, where we're already at, right? So let's not get too carried away here. I mean, that's what a podcaster does. That's what a content creator does on TikTok, right? They're constantly trying to produce things which are more interesting and surprising and educational to people. And so we are now just kind of automating and speeding up that process.

[00:30:36] But you're right. I think the thing that we have to think about as a society is, where are the boundaries and where are the limits? How do you contain this? Like, what is off-limits? There have to be some limits, right? What subject matter? What style of persuasion? Is it okay if I just get to control it? Do I get to consume whatever information I want, just as an individual?

[00:30:56] Should it be entirely free and decentralized? Clearly, we don't want it to be top-down and run by a tiny number of companies, right? We also don't want it to be run by a tiny number of governments that can say, you know, censor this, that, and the other. I mean, we can see what's happening in China as an example of a way that we don't want to live, right?

[00:31:14] So no one has the answer. So if anyone comes to you and is lecturing you about, well, it should be this, this is the problem, that's the criminal, the truth is, none of us fully knows exactly what the right next step is. But the more we sort of talk about the risks, and the more we proactively lean into those conversations and not, you know, like you said earlier, put our head in the sand.

[00:31:36] Right. You know, in the book I tried to frame it around this pessimism-aversion trap. I think it's particularly an issue in the US, where there's such a desire to believe that the future is gonna be better, and such a bias towards optimism, that I think it leads people to just be afraid of potentially talking about dark outcomes.

[00:31:55] Like, we have to talk about the potential ways in which things can go wrong so that we can proactively manage them, and so we can actually start putting in place checks and balances and limits, and not just have a bias towards optimism that leads to us missing the boat when it comes to the consequences that affect everybody.

[00:32:13] Jordan Harbinger: This is wise because, look, when I was a kid, I had an Apple IIc. It was the kind of computer we had at school. I had one at home 'cause my mom was a teacher, so we got one. It had 64 kilobytes of RAM. Now I think I've got 64 gigabytes of RAM in my gaming laptop over here, which, for people who don't know, is a hell of a lot more. And I think I drove to a computer store and bought a 420-megabyte hard drive, and I remember getting home and going, I'm never gonna fill this thing up.

[00:32:42] And now if I go and download a game, the update to the game that has, like, bonus graphics on it or something is way more than 420 megabytes. It's probably 42 gigabytes or something like that, right? That hard drive wouldn't even scratch it. And it would also take me three hours or more to write to that hard drive.

[00:33:01] This is in part due to something called Moore's Law when it comes to processors, right? So, Moore's Law: can you first of all tell us what Moore's Law is? And then naturally my follow-up is, is there a Moore's Law for AI?

[00:33:12] Mustafa Suleyman: Yeah. Great. Great question. So Moore's Law was predicted by this computer engineer, Gordon Moore, who was the founder of Intel that manufactured computer chips back in the late fifties.

[00:33:25] He predicted that transistors, or computer chips, were gonna get radically cheaper, halving in cost every year for the next, you know, 60, 70 years. Oh wow. And the crazy thing is that is exactly what's happened. And so we've been able to cram more transistors onto the same square inch for the same price. And so we've just seen this

[00:33:50] reduction year after year after year in the cost, and increase in the density, of transistors, which is basically what you're describing: your hard disk is still the same size. Mm-hmm. In fact, in many cases it's actually got smaller, right? Sure. You now have a thumb drive, which is the size of, you know, your SSD back in the day, right?

[00:34:07] Your 420-megabyte SSD. So that has been the main thing that has been powering this massive revolution, because for the same price, we can store more, process more, et cetera, et cetera, which means we can have photorealistic graphics, which means we can have these AI models now that have access to, like, all the information on the web and vast amounts of knowledge.

[00:34:28] So in the context of AI, there is a more extreme trend than that, right? Which is, as I mentioned earlier, this 10x increase per year in the amount of compute used to train the cutting-edge AI models. So instead of doubling per year, which is the Moore's Law trend, we're increasing the amount of compute by 10 times per year, because in this case, we don't need the compute to be smaller.
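To make the gap between the two trends concrete, here is a minimal Python sketch of the compounding arithmetic. It takes the rates quoted in the conversation at face value (2x per year for the Moore's Law comparison, 10x per year for frontier training compute); these are the speaker's figures, treated here as assumptions rather than measured data. For reference, Moore's original 1965 observation was a doubling of components per chip roughly every year, which he revised in 1975 to roughly every two years.

```python
def growth_factor(rate_per_year: float, years: int) -> float:
    """Total multiplication after compounding `rate_per_year` for `years` years."""
    return rate_per_year ** years

# Rates as quoted in the conversation (assumptions, not measurements).
MOORE_RATE = 2      # doubling per year
COMPUTE_RATE = 10   # 10x per year in frontier training compute

for years in (1, 5, 10):
    print(f"after {years:>2} year(s): "
          f"2x/yr -> {growth_factor(MOORE_RATE, years):,.0f}x, "
          f"10x/yr -> {growth_factor(COMPUTE_RATE, years):,.0f}x")
```

Run as-is, it reports 2x versus 10x after one year, 32x versus 100,000x after five, and 1,024x versus 10,000,000,000x after ten: a 10x-per-year trend pulls away from a doubling trend very quickly.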

[00:34:52] We can just daisy-chain more computers together. So our server farm at Inflection, for example, is the size of four football pitches. Wow. It's absolutely astronomical. It uses like 50 megawatts of power, and, you know, you look at it, it's absolutely mind-blowing. It roars like an engine. And all of that is really just graphics cards.

[00:35:15] You know, just like you have in your, you know, if you have a desktop gaming machine, you might have a GPU graphics card. We just daisy-chain tens of thousands of these together so that they can do parallel processing on, you know, trillions of words from the open web. Every time Pi produces one word when you are in conversation with it, it does a lookup of 700 million other words.

[00:35:39] That's bananas. I mean, it kind of lights up or activates or pays subtle attention to 700 million words every time it produces a new word. So when it's producing, you know, paragraphs and paragraphs of text, that's a huge amount of computation. That is the trend that is accelerating much, much faster than Moore's Law and is gonna continue for many years to come.
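The "pays subtle attention" description above loosely matches scaled dot-product attention, the mechanism in transformer language models that lets each newly generated token weigh the tokens in its context. The sketch below is a toy, pure-Python version for a single query over three context tokens with made-up 2-dimensional vectors; it is not a description of how Pi is built, and the 700 million figure quoted in the conversation is not modeled here.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Each context token contributes its value vector in proportion to how
    well its key matches the query.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output

# Hypothetical toy vectors for three context tokens.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

weights, output = attend(query, keys, values)
print([round(w, 3) for w in weights], [round(x, 3) for x in output])
```

Tokens whose keys point the same way as the query (the first and third here) get equal, larger weights. A real model does this with learned projections, many attention heads, and far larger vectors.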

[00:36:03] Jordan Harbinger: I would assume at some point the AI itself will figure out how to make that process more efficient, because it's learning everything that there is to know, or at least everything that's on the internet, which just seems pretty close to everything.

[00:36:15] Mustafa Suleyman: Yeah, I mean, we have that today. So we have that server farm that I described to you.

[00:36:21] We train one giant AI out of that server farm, and we actually use it to teach and talk to smaller AIs, which are cheaper for us to run in production when you get to chat to them. That's more efficient for us than paying tens of thousands of humans to talk to our small AIs to teach them, which we do as well.

[00:36:43] We have 25,000 AI teachers. Wow. From all walks of life and all backgrounds and all kinds of expertise. And they talk to the AI all the time, and they're paid to give it instruction. Say, this is factually incorrect. This isn't very kind. This is what funny looks like, et cetera, et cetera. Now we're actually getting the AI so good that it can do the job of the AI teacher better than the human AI teacher and teach these smaller models to, you know, behave well.
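One standard way for a large model to "teach" a smaller one is knowledge distillation (Hinton et al., 2015): the small student model is trained to match the large teacher's softened output probabilities. The conversation does not say which technique Inflection uses, and the AI-teacher feedback described above may work differently, so treat the following pure-Python loss function as a generic sketch. The logits are hypothetical numbers for a three-word vocabulary.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with a temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T**2."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's current predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical logits over a three-word vocabulary.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 1.0, 4.0]

print(distillation_loss(teacher, close_student))  # small: student mostly agrees
print(distillation_loss(teacher, far_student))    # large: student disagrees
```

Training would adjust the student's parameters to push this loss down. The `T**2` factor is the usual correction that keeps gradient magnitudes comparable across temperatures.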

[00:37:09] So, you know, what you described in a way is kind of already happening.

[00:37:13] Jordan Harbinger: That reminds me of the way podcasts work. In brief, people think, oh, I download this from your server, but not really, right? So I upload this to a server, which is probably in one of Amazon's data centers. But if somebody in Japan downloads this episode of the podcast,

[00:37:30] there's a copy of that file cached somewhere on servers that are probably, I don't know, outside of Tokyo somewhere. And then if somebody else in Japan downloads it, they don't connect to my server in the United States. They connect to that server in Japan. The Japan server says, hey, is this file the same one that you're still putting out over there in America?

[00:37:47] And our network says, yeah, but we want to put this ad in there that's in Japanese, 'cause the other one that ran was in English. Just switch that out. And the server essentially says, okay, cool, and gives 'em the exact same file. It sounds like that's a little bit of how Pi works, right? It's almost like, oh, other people have looked up recipes in the United States.

[00:38:04] We don't have to ping the main guy over there in that giant multi-football-pitch data center. We know how to tell 'em how to cook this soup. This has been done; here it is. Yeah. I know I'm oversimplifying it, but this sounds similar.

[00:38:18] Mustafa Suleyman: That's actually a great, uh, metaphor. I mean, another way of putting it is that you don't need to ask the professor of computational neuroscience how to make the recipe for spaghetti bolognese.

[00:38:24] Mm-hmm. You can go to an expert in that kind of area that doesn't require, you know, 20 years of training in neuroscience to, uh, you know, become that expert. So that's exactly the concept. Just like we deliver content to different parts of the web, we have different specialist AIs that are really small and efficient at answering different types of questions.
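Jordan's podcast-delivery analogy describes a pull-through edge cache that serves a local copy and only checks back with the origin to confirm the copy is still current, similar in spirit to HTTP revalidation with an ETag. The in-memory Python sketch below illustrates just that pattern. The `Origin` and `EdgeCache` classes and their methods are hypothetical; real CDNs and podcast hosts rely on HTTP caching headers and their own ad-insertion systems.

```python
from dataclasses import dataclass, field

@dataclass
class Origin:
    """Hypothetical origin server holding the master copy of each file."""
    files: dict  # name -> (version_tag, content)

    def current_tag(self, name):
        # Cheap check: only the version tag, not the whole file.
        return self.files[name][0]

    def fetch(self, name):
        # Expensive transfer of the full file.
        return self.files[name]

@dataclass
class EdgeCache:
    """Hypothetical edge server close to listeners (e.g. in Japan)."""
    origin: Origin
    store: dict = field(default_factory=dict)
    full_fetches: int = 0

    def get(self, name):
        cached = self.store.get(name)
        if cached is not None and cached[0] == self.origin.current_tag(name):
            return cached[1]  # still current: serve the local copy
        # Missing or stale (for example, a new ad was inserted): refetch.
        self.full_fetches += 1
        self.store[name] = self.origin.fetch(name)
        return self.store[name][1]

origin = Origin(files={"episode.mp3": ("v1", "audio with English ad")})
edge = EdgeCache(origin)
edge.get("episode.mp3")
edge.get("episode.mp3")  # served locally, no second full download
origin.files["episode.mp3"] = ("v2", "audio with Japanese ad")
print(edge.get("episode.mp3"), edge.full_fetches)
```

The same shape applies loosely to Suleyman's point: answer locally from something small and nearby when that is good enough, and go back to the big, expensive source only when needed.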

[00:38:51] Jordan Harbinger: Tell me about the video-game-playing AI machine, for lack of a better word, that you designed back in the day, because it sounds like this was one of your first experiences seeing AI do something that was, it's funny, I'm putting this in air quotes, truly amazing, because it's something you do when you're nine years old and you're playing the same video game. But still, at that point, right, it was totally mind-blowing.

[00:39:12] Mustafa Suleyman: Yeah. I mean, that was more than 10 years ago now, 2013, and we trained an AI to play the old-school Atari games. So things like Breakout and Pong, for example: Pong, where you have two paddles and you bat the ball back and forth, or Breakout, where you have to bounce a ball up and down with a paddle at the bottom that you control left and right to knock down the bricks, or Space Invaders, where you, you know, shoot the enemy ships.

[00:39:38] And the crazy thing about this is that instead of writing a rule that said, you know, if you are in this position and the ball is coming at these degrees, then move the paddle left one degree, blah, blah, blah, you basically allow the AI to just watch the screen and randomly move the paddle back and forth, left and right, until it accidentally stumbles across an increase in score.

[00:40:01] And then it's like, oh, that's pretty cool. I managed to increase the score. How did that happen? I'll try and do that next time I'm in that position. And so it's through random self-play, you know, millions of times playing against itself, 'cause it sees all the screens, 24 frames a second, frame by frame, all the pixels,

[00:40:19] that it's able to learn a pretty good strategy of playing the game. And then one day we saw that it had actually learned a strategy called tunneling. Mm-hmm. It would ping the ball up one side as often as possible and try and aim it up in the same place. And then that would force the ball to bounce behind the bricks, back and forth, up and down, and get kind of maximum score with minimum effort.
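The trial-and-error loop described above is reinforcement learning. The DeepMind Atari agent Suleyman refers to used deep Q-learning (DQN), with a neural network reading raw pixels; the pure-Python sketch below swaps in plain tabular Q-learning on a hypothetical five-cell "game" so the core idea fits on one screen. The agent starts out moving randomly, and the score gradually reinforces the moves that led to it. The environment, rewards, and hyperparameters are all illustrative assumptions.

```python
import random

N_STATES = 5           # cells 0..4; reaching cell 4 scores a point and ends the episode
ACTIONS = (-1, +1)     # index 0 = move left, index 1 = move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate

def step(state, action):
    """Hypothetical toy game: move one cell; scoring happens only at the right edge."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def choose(q, state, rng):
    """Epsilon-greedy: usually take the best-known move, sometimes a random one."""
    if rng.random() < EPSILON:
        return rng.randrange(len(ACTIONS))
    best = max(q[state])
    return rng.choice([a for a, v in enumerate(q[state]) if v == best])  # random tie-break

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action] = estimated future score
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            a = choose(q, state, rng)
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning update: nudge the estimate toward reward + discounted best future value.
            target = reward if done else reward + GAMMA * max(q[nxt])
            q[state][a] += ALPHA * (target - q[state][a])
            state, steps = nxt, steps + 1
    return q

q = train()
policy = ["right" if row[1] > row[0] else "left" for row in q[:-1]]
print(policy)
```

After training, the greedy policy moves right from every non-terminal cell: a strategy that was never written down as a rule but was discovered from the score alone.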

[00:40:43] And that was not a strategy that most human players knew about, right? Like, most of us didn't really discover that. Some of us did, but, you know, I certainly didn't. And that was pretty mind-blowing. I was like, wow, these things can not only learn to do it well, but they can actually learn new knowledge or new strategies or discover

[00:41:03] techniques and tricks which could actually be useful to us. And that was, after all, why we started building AI. I mean, that's what we want from AI. We want AI to be able to solve our big problems in the world. You know, we want it to help us tackle climate change and improve drugs and improve healthcare and, you know, give us self-driving cars, and we want to solve these massive problems that we have in the world of trying to feed 8 billion people and growing, and so on, right?

[00:41:29] So to me, that's always been my main motivation. And when I first saw that, that was like, uh, the first sign that we were onto something, back, uh, 10 years ago.

[00:41:39] Jordan Harbinger: The reason that's so amazing, and I think it's easy to gloss over this and go, so what, it learned from the best players and it copied the strategy, but that's not what happened, right?

[00:41:46] It didn't see somebody who was really good at Brickbreaker, right, and go, oh, okay, what he does is he breaks the side and then he gets the ball stuck in there, and then it does the rest of the work on its own and you can't really lose. It figured that out through trial and error, which is really incredible, because you

[00:42:03] might have to play Brickbreaker for a few weeks, months, or even years before you come across that strategy by accident and then go, oh, I need to replicate that. So this can figure it out in seconds, potentially, something like that. And now we want AI to figure out the equivalent of tunneling for, I don't know, cancer research or something in quantum physics, right,

[00:42:22] that we would never figure out, because humans haven't been there yet. And the AI goes, huh, if I want this particle to last longer than a few milliseconds in a controlled environment, I need to do all these other things, and badda boom, badda bing. Now I can make elements that don't exist that can be used to create power, for example.

[00:42:38] Generate power.

[00:42:39] Mustafa Suleyman: Yeah, I think that's totally right. That's the ambition. And I think it's a very noble one because, you know, the world today, we've got a lot of challenges. Mm-hmm. Whether it's food or climate or healthcare, I mean, the prize is big, and we need assistance in trying to invent our way out of these challenges.

[00:42:56] And all that we've got so far is our human intelligence, and everything that is of value in the world today is a product of us being smart at predicting things. And that's basically what these AIs do. They absorb tons of data and information and they make great predictions. So the thesis is, well, we could maybe scale up this prediction engine over the next couple of decades and really have some massive impact.

[00:43:20] Jordan Harbinger: Are you able to explain just briefly how AI works? Because you mentioned before it searches 700 million words, and in the Marc Andreessen episode he was like, ah, it's like a really fancy or smart autocomplete. I understand what autocomplete is because I use Google, and my phone tries to guess what I'm gonna say, and it's often right.

[00:43:39] But that doesn't really scratch the itch for me because then it's just reliant on looking at what humans have done, which is not really what we're saying AI does. Right.

[00:43:50] Mustafa Suleyman: So I think that's right. Look, it is very difficult to describe, because it's hard for us to really intuit and deeply understand very large numbers and very large information spaces.

[00:44:02] So I think the first thing to try to wrap your head around is that one of these large language models reads, many, many times, everything that has been digitized on the open web. And so this is trillions and trillions of words. You know, books and blog posts and podcasts and YouTube downloads: everything where there's text, it's consumed it.

[00:44:26] And what it's learning to do is, it covers up the future words, and given the past words, it predicts which word is likely to come next. So it's almost like it memorizes the whole thing, and then you test it and you say, given this phrase, "the cat sat on the", mm-hmm, what is the probability that the next word is head, chair, car, plane, road, banana, continent,

[00:44:55] right? And so there's gonna be some probability assigned to every single one of those words, even the really, really weird words that have never appeared after that sentence. And of course the most likely one is "mat," right? Mm-hmm. But that's a very simplistic description, because not only is it good at predicting, or autocompleting, which word is gonna come next, it's able to do that with reference to a stylistic direction.
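Large language models compute these next-word probabilities with neural networks over very long contexts. As a much simpler stand-in, the sketch below builds a count-based trigram model from a tiny made-up corpus and predicts the word after "on the". Add-one (Laplace) smoothing is included so that, as described above, even words that never followed that phrase (here the hypothetical extras "banana", "car", and "road") still receive a small probability.

```python
from collections import Counter

# Tiny illustrative corpus; real models train on trillions of words.
corpus = ("the cat sat on the mat . the dog sat on the mat . "
          "the cat sat on the chair .").split()
vocab = set(corpus) | {"banana", "car", "road"}  # words never seen after "on the"

def next_word_probs(context, tokens, vocab):
    """P(next word | previous two words) with add-one (Laplace) smoothing."""
    counts = Counter(
        tokens[i + 2]
        for i in range(len(tokens) - 2)
        if (tokens[i], tokens[i + 1]) == context
    )
    total = sum(counts.values())
    return {w: (counts[w] + 1) / (total + len(vocab)) for w in vocab}

probs = next_word_probs(("on", "the"), corpus, vocab)
for word in ("mat", "chair", "banana"):
    print(f"{word:>6}: {probs[word]:.3f}")
```

With 3 observed continuations and an 11-word vocabulary, it prints 0.214 for "mat", 0.143 for "chair", and 0.071 for "banana": "mat" is most likely, yet nothing in the vocabulary gets zero.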

[00:45:23] So just as you say to an image generator, produce me a banana in the shape of an owl in the style of Cézanne, right? Mm-hmm. Now you might be able to, you know, imagine that kind of weird combination in your head. What the AI is able to do is to take those three concepts, and not just the concept, the plain word banana, but actually its entire experience of banana:

[00:45:51] every single setting in which banana has arisen, right? All the different kinds of combinations and shapes and styles. It has this very multidimensional, hazy representation of banana, and then it's able to interpolate, which is to predict the distance between banana and owl. That's a very powerful thing because it's a stylistic, it's a position on the curve.
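The "position on the curve" idea can be illustrated with linear interpolation between two concept vectors. The sketch below uses made-up three-dimensional vectors for "banana" and "owl" (real models learn vectors with hundreds or thousands of dimensions, and image generators combine them in far more elaborate ways) and uses cosine similarity to measure how banana-like or owl-like each blended point is.

```python
import math

# Hypothetical 3-d "concept" vectors, purely for illustration.
BANANA = [0.9, 0.1, 0.3]
OWL = [0.1, 0.8, 0.6]

def lerp(a, b, t):
    """Point a fraction t of the way from vector a to vector b."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]

def cosine(a, b):
    """Cosine similarity: 1.0 means pointing in exactly the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    point = lerp(BANANA, OWL, t)
    print(f"t={t:.2f}  like banana={cosine(point, BANANA):.2f}  like owl={cosine(point, OWL):.2f}")
```

As `t` moves from 0 to 1, similarity to "banana" falls and similarity to "owl" rises, so each `t` is a different stylistic position between the two concepts.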

[00:46:14] It could be very, very like owl. It could be very, very like banana. Now imagine that you add in all the other words. Imagine the owl is flying. Imagine it's big and red. Imagine it's a banana that's going off. Imagine it's a banana that's been thrown off the edge of a building. You know, now we are homing in, and we are reducing the size of the search space.

[00:46:34] It's almost like adding filters to reduce the size of all possible things. That's just a very difficult thing to grasp when it's massively multidimensional. I've only described it in the context of two or three concepts, but now imagine that it's like hundreds of concepts or thousands of concepts of stylistic control.

[00:46:50] And as the models have gotten larger and gotten access to more compute, you can have more fine-grained control. That's why they're more accurate, right? They're more useful because they're able to attend to multiple, you know, sort of stylistic directions simultaneously.

[00:47:10] Jordan Harbinger: You are listening to the Jordan Harbinger Show with our guest, Mustafa Suleyman. We'll be right back.

[00:47:15] This episode is sponsored in part by BetterHelp. How's your social battery these days? Are you drained or are you all charged up? As the weather warms up, there's this rush to fill our social calendars again, but what is your ideal level of socializing?

[00:47:27] Do you need the buzz of people around you? Or do you need a little quiet me time? Is that more your speed? That's kind of where I fall. Therapy, like what BetterHelp offers, can be a real eye-opener here. It's not just for navigating big life challenges. It's about understanding your social needs, setting healthy boundaries,

[00:47:41] learning how to recharge effectively. BetterHelp brings therapy into the 21st century. So it's all online, it's all flexible, it fits around your life. You fill out a little survey, get matched with a licensed therapist. You can switch anytime if you need to. It's convenient, it's tailored to you, and it might just be the boost that you need to manage your social energy better.

[00:47:57] Find your social sweet spot with BetterHelp. Visit betterhelp.com/jordan today to get 10% off your first month. That's betterhelp.com/jordan.

[00:48:06] This episode of the Jordan Harbinger Show is brought to you by Nissan. Ever wondered what's around that next corner, or what happens when you push further?

[00:48:12] Nissan SUVs have the capabilities to take your adventure to the next level. As my listeners know, I get a lot of joy on this show talking about what's next, dreaming big, pushing yourself further. That's why I'm excited once again to partner with Nissan, because Nissan celebrates adventures everywhere.

[00:48:26] Whether that next adventure for you is a cross-country road trip or just driving yourself 10 minutes down the road to try that local rock climbing gym, Nissan is there to support you as you chase your dreams. So take a Nissan Rogue, Nissan Pathfinder, or Nissan Armada and go find your next big adventure. With the 2024 Nissan Rogue,

[00:48:41] the class-exclusive Google built-in is your always-updating assistant to call on for almost anything. No need to connect your phone, as Google Assistant, Google Maps, and Google Play Store are built right into the 12.3-inch HD touchscreen infotainment system of the 2024 Nissan Rogue. So thanks again to Nissan for sponsoring this episode of the Jordan Harbinger Show, and for the reminder to find your next big adventure and enjoy the ride along the way.

[00:49:03] Learn more at nissanusa.com. If you like this episode of the show, I invite you to do what other smart and considerate listeners do, which is take a moment and support our amazing sponsors. All the deals, discount codes, and ways to support the show are searchable and clickable over at jordanharbinger.com/deals.

[00:49:19] And if you can't remember the name of a sponsor, or you can't find the code, shoot me an email at jordan@jordanharbinger.com. I am more than happy to surface that code for you. Yes, it is that important. Thank you for supporting those who support the show. Now for the rest of my conversation with Mustafa Suleyman.

[00:49:36] As this stuff gets more complex, are we gonna have trouble (or perhaps we're already there) getting under the hood of an LLM, or of an AI as we know it today, and seeing why decisions are made? Because if I look at a human brain, right, and I go, hey, brain, why did you buy that jacket when you already have lots of jackets and you live in California?

[00:49:56] And my brain goes, well, there's gonna be an occasion where I really need a brown suede jacket, and this one has fine details and I like it, and it's gonna come in useful. And, uh, I don't really care. I just really wanted the jacket, right? And I can do that. And I'm quite self-aware that I bought a jacket that I didn't freaking need.

[00:50:11] And now I'm really trying to rationalize that purchase because it was expensive. This is the best my brain can do. And I'm like a reasonably qualified human. I'm leading a mostly successful life, right? What happens when we're looking at a brain, an AI, that is so much more sophisticated than our own?

[00:50:29] But it's being terrible in some way? Are we gonna be able to get in there and diagnose that? Or is it gonna be just too complicated of a black box?

[00:50:38] Mustafa Suleyman: Well, that's a cool question, and I think you kind of nailed the answer in your question, which is that humans hallucinate all the time. Yeah. Our main mode of communication is to retrospectively invent some narrative that seems to fit the bill, right?

[00:50:55] We're constantly being creative and making stuff up. In fact, when you remember something, you don't really remember. Like, what did you have for breakfast this morning? You have a very vague, loose memory. Maybe you can get it. What did you do two weekends ago? You're gonna be creating all kinds of stuff that is plausible and vaguely within...

[00:51:14] We make things up all the time, and that's what creativity is. That's what a hallucination actually is. We don't have very good ways of inspecting inside a human brain. You can whack somebody in an fMRI scanner, but it's pretty crude, and it's not reliable. So the way that we trust one another is that we observe what you say and what you do.

[00:51:36] And if what you say and what you do is consistent with what you have said you're gonna say and said you're gonna do, then over time we build up trust because we have that continuity between intent and outcome. Mm-Hmm. And that's the behaviorist model of psychology, right? We observe the output and we focus less on the introspection and the inner analysis.

[00:51:59] And I think that, practically speaking, that's gonna be the standard to which we hold a lot of these AIs for the foreseeable future. Now you could ask, which I think is what you're getting at: in the long term, isn't this thing gonna be really good at deceiving us? Because it's just gonna get smarter and smarter and smarter.

[00:52:17] And I think, you know, maybe. The good news is we can actually interrogate these models better than we can interrogate humans. It's not perfect, but we are certainly developing methods of identifying when an AI has been deceiving, when it's misrepresented something, where in the model different types of ideas or concepts sit, and what the causal relationship was that led up to a particular output.

[00:52:43] The challenge is that it's very early-stage research, but the good news is it's software. And so we have an easier time investigating and interrogating software than we do the biology of the human mind.

[00:52:55] Jordan Harbinger: That does make a lot of sense, right? Because if I go back and ask myself why I got that jacket, even if I'm really trying to be honest with myself, I'm still gonna sugarcoat the answer so I don't feel like a dumbass, right?

[00:53:07] But if you ask the AI why it said something prejudiced or racist-sounding, it might actually just go, oh, because this training dataset over here says that this kind of person often does this kind of thing. And you're like, okay, okay, we gotta take that out of the soup. That's not the kind of data that we want floating around in here.

[00:53:26] It's not accurate. We don't want that affecting your decisions in the future. And the AI goes, okay, it's as good as gone, right? It can ignore that. I can't do that in my brain. I can't stop buying jackets.

[00:53:37] Mustafa Suleyman: Yeah, exactly. And that's actually one of the weaknesses of being human, in a way: that we have emotional drives.

[00:53:43] And at the moment, AIs don't have emotional drives, and it's unclear whether they need them. So, going back to my list of capabilities that should be off-limits because they potentially cause more risk: I listed autonomy; I listed recursive self-improvement, whether AI can get better over time on its own, right?

[00:54:02] And I listed that it had its own goals, that it could set its own goals. And I would add to that: has emotional drives. I mean, it's not clear that we want AIs that have intrinsic motivation, ego, impulse, desire to do things or go places. Really, these should be treated as tools that work for us.

[00:54:23] They can still be very, very capable. But adding drives? I'm not sure I see the justification that that would be a massive benefit to society so far. So these are the kinds of tricky conversations we have to have. Like, what is the benefit there? It's not clear. I think we can have an amazing scientist, an amazing teacher.

[00:54:42] I think we can have amazing knowledge workers that can be useful to businesses and be creative and so on without actually having emotional drives.

[00:54:49] Jordan Harbinger: The idea of a computer, or, well, an AI, having emotional drives is something straight out of Star Trek or something like that. I mean, just thinking about a computer that has an ego.

[00:54:58] I mean, I say computer, I'm oversimplifying it because a lot of laymen like myself are listening to this. And the idea that the computer would go, ah, but I have to be right about this one thing, or, you're making me feel bad, I'm gonna destroy your whole civilization. Like, yeah, we don't want that.

[00:55:13] We don't want that. That sounds quite terrible.

[00:55:15] Mustafa Suleyman: It doesn't take a genius to figure out that that would be a bad outcome and that we might want to say that that's off-limits.

[00:55:21] Jordan Harbinger: Yeah. Especially as we create this amazing tool or set of tools, if we can even call it that, that's so much smarter than us. You mentioned in the book something called the Gorilla Problem, and by the way, folks, if you buy the book, please use our links in the show notes and help support the show.

[00:55:34] The gorillas that we see, of course, are bigger and stronger than us, but it's them who live in zoos. We're smarter, so we can sort of trick them into getting into a cage, and then we put 'em in the zoo and they can't get out. We're currently masters of the ocean and the land, the air, and increasingly even of space.

[00:55:50] So what happens, though, when we create something that's 10,000 times smarter than us in pretty much every measurable area? You know, the idea is that maybe it'll put us in a zoo, and I just hope the zoo looks a lot like where we are right now. Although now it sounds like I'm talking about simulation theory.

[00:56:06] Maybe we're already in the zoo. I don't know.

[00:56:09] Mustafa Suleyman: Look, I think the good news is 10,000 times smarter than us is a long way off. And so we've got time. Is it enough time to figure out that problem? I believe so. I mean, some people think that it's closer to 20 years. I think it's hard to say; it's maybe more like 40 or more, but, you know, beyond that time horizon, it gets very hazy.

[00:56:28] It's hard to judge. It doesn't feel like we're on the cusp of that anytime soon. And I know that's not the most scientific analysis, but just instinctively, that's where I think most of the field and I are at the moment. Okay. I think the point to make is we wouldn't be able to prove that a system that is that powerful could be contained,

[00:56:49] would be safe. Mm-hmm. And therefore, until we can prove unequivocally that it is, we shouldn't be inventing it. Mm-hmm. That, I think, is a pretty straightforward, common-sense reality, right? We can still get tons and tons of benefit from building these narrow, practical, applied AI systems. They'll still talk to us.

[00:57:12] We'll have personal assistants. You know, they will automate a bunch of work that we don't wanna do. They'll create vast amounts of value. We'll have to figure out how we redistribute that value so that everybody ultimately will end up with an income. But that does not mean that we have to create a superintelligence.

[00:57:28] It just means that we would've created a huge amount of value in the world. And the current structure of society, and the politics and governance around that, is gonna look very different to what it is today.

[00:57:37] Jordan Harbinger: I can get behind that. I think a lot of people can get behind that. I think the only place where people might take a little bit of issue is, okay, we should probably not build that.

[00:57:47] And then, you know, China's going, okay, fine, don't build it. We're probably gonna try to build it, though. And then I think you'd said something along the lines of, if one side is not in an arms race, but the other side thinks that they're in an arms race, then there's an arms race.

[00:58:01] Mustafa Suleyman: I did say that, and I think that is true, which is a trap that we have to unpick ourselves from, because

[00:58:09] the other side doesn't wanna self-destruct either, right? They're not crazies. Thankfully, even Putin doesn't want to commit suicide. Mm-hmm. Right. Everybody has a survival instinct. And that is what has led us to create relative global peace in the postwar era with nuclear weapons. You know, this idea of mutually assured destruction

[00:58:34] has actually been an incredible doctrine, right? Even though there has obviously been a huge amount of suffering and war over the last 70 years, we haven't had a world war, and that's great news because it shows that everybody will ultimately act like a rational actor if their future life is truly threatened.

[00:58:55] So I think the argument that I and others in the field have often made is that a system that powerful is as unlikely to follow your instructions, to obey you as its ruler, as it is to obey your enemy, right? Because at that point, it's not gonna care whether you are China or India or Russia or the UK, or you are a government, or you're just a random academic.

[00:59:17] There's gonna be a question of how you actually constrain something that powerful, regardless of where you are from. I think that that's an initial starting point for thinking about how we all add some serious caution here, if and when we get to that moment in decades to come. Just to be clear, we're nowhere near that right now, but, you know, it's a question that we have to start thinking about.

[00:59:37] Jordan Harbinger: Yeah. It's scary to see the prediction that AI could then self-improve, right? Because it seems like as soon as it gets to that point, that curve could go so fast that we just wake up one day and it surprises everybody. Or is that sort of a sci-fi concern that I don't really need to have?

[00:59:55] Mustafa Suleyman: I think it's a sci-fi concern.

[00:59:57] We haven't seen that kind of... it's called an intelligence explosion. There's just no evidence that we've seen that kind of thing before. However, the more we deliberately design these AIs to be recursively self-improving, like to close the loop so that they update their own code, interact with the world, and then update their own code again, and then if you just give a system like that infinite compute, right?

[01:00:18] Because ultimately, the good news is they run on physical things, right? They're not just, like, bits in information space; they're actually grounded in atoms. Those atoms live in servers. Those servers live on land, which is regulated by governments. And so there is a choke point around which governments, the democratic process, and people in general can hold these things accountable and can rate-limit progress.

[01:00:44] And that's obviously good news. So I don't see this happening in a garage lab anytime soon.

[01:00:49] Jordan Harbinger: Right. That does make sense. It's kind of like if a car was sentient, it still needs gasoline, and that gasoline still has to come from refined petroleum; you still have to dig for the petroleum and have the oil well.

[01:01:00] So it's like the AI might be able to make itself smarter, but it knows, okay, I need six more of these football-field-size data centers to do that. It can't just sneakily get those overnight. It has to somehow trick a nation-state, right, into creating that for it or something, and then do something nefarious with it.

[01:01:20] Which gives us, in theory, a lot of opportunity to go, do we wanna do this? Is this a good idea? Maybe we shouldn't do this. Maybe we need a safeguard. Maybe we need a physical off switch, where somebody can go rip this plug out of the wall if this thing starts going cuckoo on us.

[01:01:34] Mustafa Suleyman: And just to be clear, that is what's already happening.

[01:01:37] Right. So this company, Nvidia, that makes the AI chips, the GPUs I described earlier: in the last year the share price has, I don't know, gone up three times or something crazy. It's one of the big trillion-dollar companies now. And those chips were regulated by the US government last year

[01:01:56] so that the very cutting-edge chips couldn't be exported to China. I think there's already a pretty good understanding of the potential for this to be used for military purposes as well. And, you know, the government has moved fast on this, proactively intervening to protect national security, and now, as a catch-up, starting to think about how it actually affects us domestically as well.

[01:02:19] So I agree with you. I think that there's gonna be friction here, naturally, in the system that gives us time to, you know, see: are we just fooling ourselves and being doomers and exaggerating this kind of nonsense? Is it actually just nuts, or is it actually real, right? And is it actually happening? And do we need to take some other interventional measures to make sure it turns out the right way?

[01:02:43] If we do