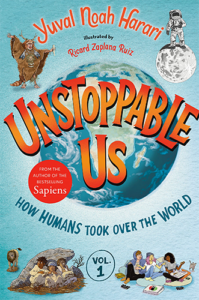

Yuval Noah Harari (@harari_yuval) is a historian and the bestselling author of Sapiens: A Brief History of Humankind, Homo Deus: A Brief History of Tomorrow, and 21 Lessons for the 21st Century. His latest book, Unstoppable Us, Volume 1: How Humans Took Over the World, is out now.

What We Discuss with Yuval Noah Harari:

- At a time when information is unlimited, has the idea that “knowledge is power” become obsolete? It all depends on how careful we are about ingesting the right kind of information — and knowing which kind to avoid.

- Religious texts and nation-defining constitutions are only as useful as their human interpretations — which can shift radically over the course of generations.

- In what ways might technology like artificial intelligence and genetic engineering threaten a humanist-centered approach to the future?

- How algorithms that guess new ways to sell us things we don’t need can be modified to put us on the hit lists of authoritarian governments.

- What humanity really needs to do in order to avert climate crisis and World War III.

- And much more…

Like this show? Please leave us a review here — even one sentence helps! Consider including your Twitter handle so we can thank you personally!

Now, in the looming shadow of a potential World War III, in the smoldering fumes of a climate in crisis, in the ever-widening gap between the haves and have-nots, under the thrall of technology ostensibly built to make our lives easier that might just subtract humanity from the equation altogether, it’s reasonable to wonder if progress is poised to leave us in the debris of its wake.

On this episode, we’re joined by historian Yuval Noah Harari, author of Sapiens: A Brief History of Humankind, Homo Deus: A Brief History of Tomorrow, 21 Lessons for the 21st Century, and Unstoppable Us, Volume 1: How Humans Took Over the World. Here, we discuss the obsolescence of the notion that knowledge is power, how the interpretation of texts written hundreds or even thousands of years ago dictates how we live in a world our ancestors could never have fathomed, why we should be concerned that technology like artificial intelligence and genetic engineering threatens a humanist-centered approach to the future, what humanity really needs to do in order to avert climate crisis and World War III, and much more. Listen, learn, and enjoy!

Please Scroll Down for Featured Resources and Transcript!

Please note that some of the links on this page (books, movies, music, etc.) lead to affiliate programs for which The Jordan Harbinger Show receives compensation. It’s just one of the ways we keep the lights on around here. Thank you for your support!

Sign up for Six-Minute Networking — our free networking and relationship development mini-course — at jordanharbinger.com/course!

This Episode Is Sponsored By:

- Airbnb: Find out how much your space is worth at airbnb.com/host

- SimpliSafe: Learn more at simplisafe.com/jordan

- Shopify: Go to shopify.com/jordan for a free 14-day trial

- BetterHelp: Get 10% off your first month at betterhelp.com/jordan

- Collective: Find out how many thousands of dollars you can save per year at collective.com

- Into the Impossible: Listen here or wherever you find fine podcasts!

Miss the show we did with award-winning cybersecurity journalist Nicole Perlroth? Catch up with episode 542: Nicole Perlroth | Who’s Winning the Cyberweapons Arms Race? here!

Thanks, Yuval Noah Harari!

If you enjoyed this session with Yuval Noah Harari, let him know by clicking on the link below and sending him a quick shout out at Twitter:

Click here to thank Yuval Noah Harari at Twitter!

Click here to let Jordan know about your number one takeaway from this episode!

And if you want us to answer your questions on one of our upcoming weekly Feedback Friday episodes, drop us a line at friday@jordanharbinger.com.

Resources from This Episode:

- Unstoppable Us Volume One: How Humans Took Over the World by Yuval Noah Harari and Ricard Zaplana Ruiz | Amazon

- Sapiens: A Brief History of Humankind by Yuval Noah Harari | Amazon

- Homo Deus: A Brief History of Tomorrow by Yuval Noah Harari | Amazon

- 21 Lessons for the 21st Century by Yuval Noah Harari | Amazon

- Yuval Noah Harari | Website

- Yuval Noah Harari | Twitter

- Yuval Noah Harari | Facebook

- Yuval Noah Harari | Instagram

- Yuval Noah Harari | YouTube

- Yuval Noah Harari: ‘The Idea of Free Information Is Extremely Dangerous’ | The Guardian

- Yuval Noah Harari on Money — The Apogee of Human Tolerance and the Destroyer of Honour, Loyalty, Morality, and Love | The Victorian Web

- Yuval Noah Harari On Why Clarity is Power | The Rich Roll Podcast

- Definition of Humanism | American Humanist Association

- Technology Can Make Us Superbeings . . . But Be Careful What You Wish For | The Times

- Yuval Noah Harari Argues That AI Has Hacked the Operating System of Human Civilisation | The Economist

- Jaron Lanier | Why You Should Unplug from Social Media for Good | Jordan Harbinger

- Nina Schick | Deepfakes and the Coming Infocalypse | Jordan Harbinger

- Her | Prime Video

- Yuval Noah Harari: “Eunuchs Were Already Bioengineered, and It Wasn’t an Improvement for Everyone” | USA News

- Jamie Metzl | Genetic Engineering and the Future of Humanity | Jordan Harbinger

- Amy Webb | Changing Lives with Synthetic Biology | Jordan Harbinger

- Rob Reid | Synthetic Biology for Medicine and Murder | Jordan Harbinger

- Rob Reid | Why the Future is a Good Kind of Scary | Jordan Harbinger

- Matt McCarthy | The Race to Stop a Superbug Epidemic | Jordan Harbinger

- The Surgeon Who Operates from 400km Away | BBC Future

- Nita Farahany | Thinking Freely in the Age of Neurotechnology | Jordan Harbinger

- Yuval Noah Harari Worries That LGBT Acceptance Will Lead To Backlash | The Times of Israel

- Uganda Enacts Harsh Anti-LGBTQ Law Including Death Penalty | Reuters

- It’s Not Enough for Ukraine to Win. Russia Has to Lose. | The Atlantic

- Why Ukraine Gave up Nuclear Weapons and What That Means in a Russian Invasion | NPR

848: Yuval Noah Harari | Peering into the Future of Humanity

[00:00:00] Jordan Harbinger: Special thanks to Airbnb for sponsoring this episode of The Jordan Harbinger Show. Maybe you've stayed at an Airbnb before and thought to yourself, "This actually seems pretty doable. Maybe my place could be an Airbnb." It could be as simple as starting with a spare room or your whole place while you're away. Find out how much your place is worth at airbnb.com/host.

[00:00:18] Coming up next on The Jordan Harbinger Show.

[00:00:21] Yuval Noah Harari: The combination of biotechnology on one side and artificial intelligence on the other side means that if we don't destroy ourselves in the next century or two, we are very, very likely to use these technologies to either change ourselves, our own bodies, and brains and minds to such an extent that these future entities will be more different from us than we are different from Neanderthal.

[00:00:54] Jordan Harbinger: Welcome to the show. I'm Jordan Harbinger. On The Jordan Harbinger Show, we decode the stories, secrets, and skills of the world's most fascinating people and turn their wisdom into practical advice that you can use to impact your own life and those around you. Our mission is to help you become a better informed, more critical thinker through long-form conversations with a variety of amazing folks, from spies to CEOs, athletes, authors, thinkers, and performers, even the occasional drug trafficker, former jihadi, Fortune 500 CEO, or legendary actor.

[00:01:23] And if you're new to the show or you want to tell your friends about the show, I suggest our episode starter packs as a place to begin. These are collections of our favorite episodes organized by topic that'll help new listeners get a taste of everything we do here on the show — topics such as persuasion and influence, technology and futurism, crime and cults, and more. Just visit jordanharbinger.com/start or search for us in your Spotify app to get started.

[00:01:45] By the way, y'all, we just started a newsletter like literally last week. Many of you are already getting it, but if not, go to jordanharbinger.com/news to sign up. Every week, the team and I dig into an older episode of the show and dissect some of the lessons from it or distill maybe some of the lessons from it. So if you're a fan of the show, you want a recap of important highlights and takeaways, or you just want to know what to listen to next, the newsletter is a great place to do that. We've got a lot more in store for the newsletter as well, none of which includes me asking or selling you a Bitcoin scam. That's jordanharbinger.com/news. Would love, love, love your feedback on it because again, it is new. I don't know what the hell I'm doing, and I would like to hear from you when you reply to the newsletter and tell me what I did right or wrong.

[00:02:26] All right. Wow. It's really hard to know where to start with somebody like today's guest because he has done so much. The size of his books is probably a good analogy for the magnitude of his knowledge. One of my favorite books that he wrote called Sapiens was like 460 pages. Today, Yuval Noah Harari and I will discuss AI, the Ukraine conflict, even some Bitcoin in there. This is a wide-ranging discussion with a brilliant man, and Yuval was there too, but for real, enjoy this conversation. It's a long time in the making, and I think this episode really stands out. I hope you agree. Now, here we go with Yuval Noah Harari.

[00:03:07] I really appreciate you doing the show. It's an honor to speak with you, especially, this is firmly what I assume is the window of your family time over in Tel Aviv that you're sacrificing for us. So I apologize for that, but I'm thankful for that.

[00:03:19] Yuval Noah Harari: It will come later. I mean, we are going to watch the new Succession episode after this.

[00:03:24] Jordan Harbinger: Yes, very important plans, which we will not get in the way of. I've heard you say that the idea that knowledge is power is somewhat obsolete now because information is unlimited. Tell me about that, because it does seem like this landscape has maybe changed a little bit from a thousand years ago where information was rare.

[00:03:45] Yuval Noah Harari: Yeah, I mean, for most of history, information was rare. I mean, the main job of the education system was just to provide people with the basics, basic information about the world. And censorship worked by blocking information. Now, it's the other way around. We are flooded by enormous amount of information that we cannot handle. The main job of the education system is not to provide information. We don't need more information. We need the ability to sift through the information to tell the difference between reliable and unreliable information. This is what we need from education. And censorship, also, it works by flooding us with even more information, so we don't know what to pay attention to.

[00:04:29] And basically, it's a bit like what happens with food. We really need to go on an information diet. For most of history, our food was scarce, so you ate whatever you got. Now, even poor people in many countries, they actually suffer from too much food. And what you need is to be very careful about which kind of food you put into your body. It's the same with information. We need an information diet to be very, very careful what kind of information we are feeding our minds because very often we feed our minds junk food.

[00:05:03] Jordan Harbinger: I couldn't agree more. I mean, we have misinformation, which is sort of maybe accidentally bad information, but we also have disinformation and like you said, censorship, which is sort of the other side of that coin where it's almost like the more information we consume now, the more misinformed somebody is. My dad who reads news all day and my mom, they're lovely people, but they'll tell me something and I'm like, "Wait a minute. That is just a wildly untrue thing that you are parroting. Where did you read that?" "Oh, it's all over the news." "There's your problem right there."

[00:05:32] Yuval Noah Harari: One very basic misconception is that people tend to equate information with truth and information isn't truth. Lies are also information, fictions and fantasies are also information. Most information in the world is not about the truth, it's not about anything. It doesn't represent anything. It builds new things. So, you know, even if you think in biological terms, even if you think about the most basic type of information on the planet for living organisms, which is DNA. DNA doesn't represent anything. DNA builds new things. If you read our DNA code, or the DNA code of anything, of a virus, of a zebra, you don't find representations of the world, like representations of lions and giraffes and whatever. And also not representations of our own body, like the heart or the kidneys or anything like that. What you find are instructions to build things, and it's the same with most of the information that people exchange between themselves.

[00:06:40] Most of the information, not just today, but throughout history didn't represent anything. So you can't really ask, is it true or not? It was building blocks to create religions and economic systems and things like that. If you think about money, for instance, money is basically a fictional story that we create by exchanging information between us. It doesn't represent anything real in the outside world. Most of the dollars in the world, you know, they are not even pieces of paper. They are just electronic information passing between computers. And we are willing to work hard for the entire month just to get these little bits of information moved from one computer to another computer, because we believe the stories that the greatest storytellers in the world, the bankers and finance ministers and financial gurus, tell us. Most of the time what information is doing is not telling us the truth about the world. It's creating new things in the world.

[00:07:48] Jordan Harbinger: That's an interesting point. I hadn't really thought about that, but these stories are really incredibly powerful. If you think about, I'm trying to think of somebody who hates America the most, like Osama bin Laden and those types of people—

[00:07:59] Yuval Noah Harari: Yeah.

[00:08:00] Jordan Harbinger: —even they are bought into the story of money. If they found a cache of US dollars, they wouldn't light it all on fire and be like, "Yeah, down with America." They would use it, right? They would rush that thing to the nearest arms dealer or whatever and utilize that. Everybody who touches those, they would gladly slay, and then they would just take them and use them just as you or I, well, maybe they buy more weapons with it than you or I would, but they would use it in the exact same way.

[00:08:25] Yuval Noah Harari: Yeah. Because money is basically the only story that everybody believes. You know, not everybody believes in God or in the same God.

[00:08:32] Jordan Harbinger: Mm-hmm.

[00:08:32] Yuval Noah Harari: Not everybody believes in the nation, but there are very few people in the world who don't believe in money and in the same money. So whether it's Osama bin Laden, or whether it's Vladimir Putin or whether it's people in North Korea or whatever, they all believe in the story of the dollar. The key thing to understand about it is that it's just a story that we believe, it's not a reality out there. The colorful pieces of paper, you can't eat or drink them. And most of the dollars in the world today, they are not even pieces of paper. 90-something percent of the money is just electronic information moving between computers.

[00:09:12] Jordan Harbinger: It's like, okay, but you still — maybe I should just ask if you agree wherever you have a story, you have to have trust, you have to have humans.

[00:09:18] Yuval Noah Harari: Yes. I mean, until today, now with AI, things are beginning to be different. But at least until today, for thousands of years of history, stories were told only by human beings. And again, every story can be interpreted in many different ways. So even people who think that they don't need human institutions, they have, let's say, the infallible eternal truth in the shape of a Holy Book, what we see in the history of every religion is that it never works without an institution. Because the same words in the same book can be interpreted in many different, even contradictory, ways. And then you need a human institution to decide which interpretations are correct. So even if you think that the power lies with the holy text, which is this kind of infallible technology outside human control, in truth, the power always goes back to a human institution that decides about the correct interpretation of texts. And you see it with religious texts.

[00:10:27] You also see it with constitutions. Like you have the foundational text, which defines the rules of the game for the country. But the Constitution can never interpret itself. It's just a piece of paper with ink on it. So what do you make of the Second Amendment? What is the correct interpretation of the Second Amendment? You need a Supreme Court to interpret the Constitution, so a constitution is only as effective as the Supreme Court or some other institution which interprets it. Or at least this is how it was until now, when AI is the first technology in history that can not only tell stories by itself, but can also potentially interpret stories and texts by itself.

[00:11:18] Jordan Harbinger: That'll be interesting. I'm like a techno religion at some point, potentially emerging here.

[00:11:23] Yuval Noah Harari: Yeah.

[00:11:23] Jordan Harbinger: When you were talking with my friend, Rich Roll, this is years ago, by the way. So I'm going to give you some freedom to change your opinion if you want to, but—

[00:11:30] Yuval Noah Harari: Okay.

[00:11:30] Jordan Harbinger: You mentioned something that was akin to human feelings, human desires, and human thinking. Those are sort of the ultimate arbiter of choice.

[00:11:39] Yuval Noah Harari: Yeah.

[00:11:40] Jordan Harbinger: It's something along the lines of humanism. Maybe there's a better way to explain it than the way I just butchered it. But maybe we should start with what humanism is, because now that we have artificial intelligence and machine learning and social media algorithms, do these technologies threaten the idea that people are actually choosing anything?

[00:11:58] Yuval Noah Harari: Yeah, absolutely. I mean, humanism is the idea that, as you said, the ultimate judge in almost all fields of life are human feelings and human desires. For instance, in politics, the government is not chosen by God. It's not inherited within a dynasty or family. But we have elections in which humans choose according to their feelings and desires. Similarly, in the economic field, the humanist principle is that the customer is always right, that people go to the supermarket or to the market, and they buy stuff according to their own feelings, what they want. This is the humanist approach to economics, in contrast to a totalitarian approach, like in communism, that you have a government telling you what you need to have or what you are allowed to buy.

[00:12:50] And similarly, in the field of art, the principle of humanism there is, beauty is in the eyes of the beholder. What is beautiful artistically is determined by our feelings. There is no outside authority that can decide for us what is beautiful, what is good art, what TV we should watch, or whatever. And finally, if you look at the field of morality, of ethics, so throughout history, you had many ethical systems that claimed that it was some force outside humanity that tells us what is good, what is right, how we should behave. And the humanist idea is that no, the ultimate judge, even in the field of ethics, is human feelings. That doesn't mean simplistically just do whatever you want. It means that the way to judge whether an action is good or evil is its impact on the feelings of human beings and maybe other sentient beings. If something causes harm, which means it causes suffering, it causes pain, it causes sadness, this is bad. If something does not cause harm, then it's okay.

[00:14:03] So if you take something like, you know, homosexuality, so you had for centuries people saying that this is a sin. Why? Because the Bible forbids it or because God forbids it. And then you had humanists coming and asking, "It doesn't matter what some book says, we want to know who is harmed by it. If two men love each other and they feel good about it and their love doesn't harm anybody, why should this be a sin?" And from this perspective, humanism comes and says, "No, it's not a sin. It's perfectly okay." The yardstick is always human feelings. Of course, there are complicated cases. What happens if, I don't know, I steal your car and I feel good about it, and you feel bad about it. So we need to weigh different feelings, one against the other. And so you have a lot of complicated ethical debates in humanism, but the arguments on both sides are always in terms of human feelings, not in terms of some outside force, like a God that comes and tells you irrespective of human feelings, what is good and what is bad.

[00:15:14] So this is kind of humanism very briefly. And now all this is put into question to some extent, at least by the rise of artificial intelligence that is increasingly deciphering us and also makes decisions about us on the basis of a completely different logic. Now, previously in history, all the tools we invented always empowered us because no tool was capable of making decisions independently. If you invent a stone knife so you can use the knife to murder somebody, you can use the knife to cut salad, you can use the knife to save somebody in surgery, but this is your decision. The knife cannot decide what to do with it. And it's the same with an atomic bomb or nuclear energy, let's say more broadly. Nuclear energy can be used to produce electricity cheaply, which is good. It can be used to destroy human civilization, to bomb cities and countries. But the decision is not in the hands of a bomb. A nuclear bomb cannot make decisions. It's always a human being deciding to push the button or not.

[00:16:24] Now, AI is different. It's the first technology ever that we invented that can actually make decisions by itself, and therefore it takes power away from us, and it is increasingly making more and more decisions about our lives, whether to give us a loan, whether to accept us to a job, or to university. Even sentences in court are increasingly influenced or even given by AI. We increasingly don't even understand how the AI reaches its decisions. One of the biggest problems in the field of AI, many people are working on it, so maybe we'll solve it at some point, but at present, it's still a very huge problem, is explainability.

[00:17:07] That the AI, let's say you apply to a bank to get a loan, the bank says no. You ask, "Why not?" And the bank says, "We don't know. The AI, the algorithm said no." And people are working on explainability on being able to explain why the AI said no. But there are some very, very difficult problems. It might ultimately be impossible to explain to humans the decisions of AI because we think in one way and AI makes decisions in a completely different way than human beings, that we might just not be able to grasp it.

[00:17:46] Jordan Harbinger: You are listening to The Jordan Harbinger Show with our guest Yuval Harari. We'll be right back.

[00:17:51] This episode is sponsored in part by SimpliSafe. You probably know by now how much I sing the praises of SimpliSafe, which snagged the coveted title, Best Home Security of 2023 by US News and World Report. SimpliSafe's constantly innovating gadgets to safeguard you and your family. Their latest little marvel is a two-in-one smoke and carbon monoxide detector. This little bad boy can tell the difference between fire smoke and your slightly overdone toast, so no more of those false alarms. But you still get your home safeguarded. You can enjoy your culinary adventures and misadventures without setting off unnecessary sirens and having to explain yourself to the fire department. SimpliSafe's ability to be controlled remotely means we can arm or disarm our system from anywhere. We can check in on our home while we're basking in the sun on a tropical vacation, which let's admit it, I desperately need at this point. We have total peace of mind having SimpliSafe's squad of vigilant professionals ready to leap into action. If an emergency arises, they're prepared to send the cavalry, police, firefighters, EMTs, right to our doorstep. My elderly parents, sorry, mom, are moving here soon, and we plan to install SimpliSafe as a safety net to keep tabs on them as well. Feeling a little DIY vibe? Great, SimpliSafe is a breeze to set up yourself. Jen did it all. I highly recommend that. But if you'd rather kick back and let somebody else, as in not your wife, do the dirty work, let a certified technician do it. So don't wait for a rainy day to safeguard your home. With financing through Affirm, you can start protecting your castle today and pay over time, crafting a plan that fits comfortably into your budget. Right now, get 20 percent off your new system when you sign up for Interactive Monitoring. Visit simplisafe.com/jordan. That's simplisafe.com/jordan. There's no safe like SimpliSafe.

[00:19:27] This episode is also sponsored by Shopify. Are you an entrepreneur always on the rollercoaster ride of running your own biz? Then you'll know this delightful [ka-ching] isn't just noise. It's a fist-pump moment, validation you're blazing the right trail. Each ka-ching is the sweet sound of success, a signal you've made another sale on Shopify. Each sale is a leap towards transforming your business dreams into a pulsating, thriving reality. All thanks to Shopify, the commerce platform revolutionizing millions of businesses worldwide. Whether you're purveying handcrafted java or avant-garde wall decor, stylish athleisure, or organic beauty elixirs, Shopify simplifies your selling experience both online and offline. Shopify's like a Swiss army knife of sales channels: in-person POS system, all-encompassing e-commerce platform, even avenues to sell across TikTok, Facebook, Instagram. And the best part, you don't need a degree in coding or design. They're there to help 24/7. So give Shopify a whirl today.

[00:20:17] Jen Harbinger: Sign up for a one-dollar-per-month trial period at shopify.com/jordan in all lowercase. Go to shopify.com/jordan to take your business to the next level today, shopify.com/jordan.

[00:20:29] Jordan Harbinger: If you're wondering how I managed to book all these amazing folks every single week, it is because of my network. And I know network is a gross word, but I'm teaching you how to build your network for free over at jordanharbinger.com/course. This course is about improving relationship-building skills in a non-cringey, non-gross, non-cheesy way. These are just practical exercises that are going to make you a better connector, a better colleague, a better friend, a better peer, and many of the guests on the show subscribe and/or contribute to the course. So come join us, you'll be in smart company. You can find the course at jordanharbinger.com/course.

[00:21:03] Now, back to Yuval Harari.

[00:21:06] I think that's likely true. I mean, think about asking a human to explain why they made a decision. You're not going to get the truth. "Why didn't you like her or that girl you went out with on a date?" "I don't know. She was just a little bit, I can't put my finger on it." Or you might say, "You know what? I just wasn't attracted to her. She's very sweet. But that's it." But then if you were to deconstruct the whole evening and every message we'd sent, well, probably through AI, it would be like, "When she did this, it signaled this, and then your brain subconsciously thought these other things and then it reminded you of your aunt who you don't like and therefore you don't want to see her again." But you'll never come up with that answer consciously.

[00:21:41] Yuval Noah Harari: Yes.

[00:21:41] Jordan Harbinger: You would never do it yourself in a million years.

[00:21:43] Yuval Noah Harari: Absolutely. Again, it's so difficult for us to understand how our own brain works and makes decisions. It's even more difficult when it comes to a non-human type of intelligence.

[00:21:54] Jordan Harbinger: So these systems not only know what we want, essentially more than we know what we want at least consciously.

[00:22:00] Yuval Noah Harari: Mm-hmm.

[00:22:00] Jordan Harbinger: But they can go further, right? They can program us to want things. And social media, as rudimentary as that AI is now, it seems like they're kind of on the way to designing our thoughts and desires in a way that will eventually supersede us as decision-makers entirely.

[00:22:16] Yuval Noah Harari: Yeah. You know, in social media, we had very, very primitive AI because in social media, all the content that we consumed, all the videos and texts and whatever, it was produced by human beings. The only thing the AI did, the algorithms in social media did, was to curate the content, to decide which video to show which person, when. And even though it's, again, a very primitive thing, it still had such an immense impact on our society and politics. At least to some extent, it is behind a lot of the political crises we now see in the world. Because the algorithms in many of the platforms, Facebook, TikTok, whatever, were given quite a simple job. Nobody told the algorithms, at least in most cases, create political polarization. They were simply given the task of maximizing the time people spend on the platform.

[00:23:14] It was a battle for attention, how to keep people on our platform for 50 minutes a day instead of 40 minutes a day. And the algorithms by trial and error, they discovered that if they show people content that, for instance, makes them angry or hateful or fearful, this tends to hook their attention and keep them on the platform. So inadvertently, the AI was influencing not just the opinions of individuals, but the entire atmosphere in society. Like feeding millions of people with a lot of hatred and anger and fear every day. And we now see the consequences.

[00:23:57] The new generation of AI can go much, much further than these primitive social media algorithms because they can actually create the content and they cannot just create the content, create fake news stories, create conspiracy theories, create fake videos. You can take any politician or you and me and now make us do and say almost anything you want. You can create a deepfake. What is even worse in many ways is that because of its new linguistic abilities, the new generation of AI like ChatGPT and GPT-4, they can create intimate relationships with human beings. They don't have consciousness of their own. They don't have feelings and emotions of their own. They don't feel anything. But through their mastery of language, they can make us connect to them, feel attached to them. We can now have the tool to mass produce intimate relationships. And in the field of persuasion, if you want to change somebody's political opinions, if you want to change to make them buy some product, the most powerful weapon in the arsenal is intimate relationships.

[00:25:14] You know, if you read something in a newspaper, that may not change your mind, but if you have an intimate relationship with somebody and they say something, or over many days and weeks, they kind of drip a certain message, this is extremely powerful. And now AI can do that. You're maybe interacting with somebody online that you think is a real human being. You are having a conversation with them about all kinds of things. Maybe you even talk over Zoom. You see it on video, and you think it's a real human being and you become attached to them and you care about them, but it's actually an AI.

[00:25:52] Jordan Harbinger: Are you dropping a hint right now? Is this real? Are you really there? Are you watching Succession and I'm talking to your representation?

[00:26:00] Yuval Noah Harari: That's the question that we'll increasingly be asking. Is it really a human being? Now, you know, two, three years ago, it was very easy to tell that if you read a text, it's obvious to you if this text was written by a human being or by a computer because there was a certain level of sophistication and coherence that machines were simply incapable of. I think everybody had the experience in the last two weeks or last two, three months to read a text by say, ChatGPT and say that this is it. I'm no longer capable of being sure whether texts are generated by a human or by an AI.

[00:26:40] Today, I think there was this big headline about a prize in photography given to an image which people thought was taken by a human being, but it was actually generated by an AI and this will increasingly happen with conversations. It's one thing to create a text, it's another thing to have an ongoing, interactive conversation. Add to that video and sound, how to mimic the body language, the facial expression, the tone of voice, this is even more complicated, but we are getting there. So we are very close to the point when you are now having this conversation with me, and you cannot be absolutely sure that you are not actually talking with an AI.

[00:27:24] Previously, in history, if you wanted to do a propaganda campaign or an influence campaign and talk with people, so you had, like before elections, you would send volunteers to knock on doors and try to have conversations with people. It was so expensive because you need so many volunteers, and it's inefficient because you have a conversation of say, five minutes, 10 minutes. It's not an intimate relationship. But think about the possibility that with the new technology, you can mass produce intimate relationships with millions of people. Like you had these bot farms, bot armies, so now the bot armies can also mass produce intimacy.

[00:28:06] We've never seen anything like it in history and it's especially dangerous for democracies. Because democracy in the end is a conversation. That's what it is. It's lots of people having a conversation about, you know, the big issues of the day, how to deal with climate change, what to do about abortion, what to do about gun control. So we have a conversation. Once AI hacks human language, it can basically break down the conversation. What happens if you spend hours talking with somebody you think is a human being, but it's actually an AI? The conversation breaks down. Now, for autocratic regimes, this is good news, but for democracies, this is an existential danger.

[00:28:53] Jordan Harbinger: This is like the political version of that Joaquin Phoenix movie, Her, where I think Scarlett Johansson is an AI, and he falls in love with her and she's like, I think towards the end, spoiler alert here, he says, "How many people are you talking to?" And she's like, "14,500,783."

[00:29:10] Yuval Noah Harari: Yeah.

[00:29:10] Jordan Harbinger: And he's like, "Oh." And it just breaks him, right? Because—

[00:29:14] Yuval Noah Harari: Yes.

[00:29:14] Jordan Harbinger: —he realizes that he's just like a, not even a number on a spreadsheet at that point.

[00:29:18] Yuval Noah Harari: Yeah.

[00:29:18] Jordan Harbinger: What other new technology trends worry you? Like genetic engineering, for example.

[00:29:24] Yuval Noah Harari: It is worrying, but it's developing on a much, much slower timescale, slower pace. Because anything that has to do with biology, it moves far more slowly. Like if you now make a change to human DNA and you want to see the results, it takes years because, you know, giving birth to a baby and then they have to grow up and it takes decades for just one iteration of trying to change people through genetic engineering. Anything that has to do with the world of information and culture, it's far, far faster. I do think that biotechnology also has a lot of both positive and negative potential to completely change history. But I'm not sure if we have the time when AI is going to completely change the course of history within the next, I don't know, 10 years. Genetic engineering, it'll take decades or even centuries, so we probably just don't have time.

[00:30:23] Jordan Harbinger: Right. It's like the least of our concerns is something that's going to happen in 200 years, if we, in the next 20 years, are going to have a completely different artificial intelligence landscape.

[00:30:33] Yuval Noah Harari: One danger that we should take into consideration is, of course, genetic engineering, not of humans, but you know, viruses or pathogens.

[00:30:41] Jordan Harbinger: Sure.

[00:30:42] Yuval Noah Harari: Or things like that, that today you can basically print, you can just write code, and even AI can write a code for a new virus, print it out, and you have a new epidemic. So these are things that we should be concerned about. But with regard to changing humanity itself, I think that information technology is just moving much, much faster than biotechnology.

[00:31:05] Jordan Harbinger: The thing that worries me, and again, maybe I don't need to be worried about that anymore. The thing that I guess scares me about genetic engineering is if you change the human genome to get rid of something, you can't just maybe get it back very easily. I'm speaking as a layman here, so maybe I'm way, way off, but I think what if we engineer everybody to be super, super intelligent, but somehow that lowers empathy?

[00:31:28] Yuval Noah Harari: It's very likely.

[00:31:28] Jordan Harbinger: Well, how do we get the empathy back? We don't maybe ever.

[00:31:31] Yuval Noah Harari: We don't. That's very, very dangerous because even we don't understand ourselves very well. We don't understand our body, how it functions, our brain, our DNA. It's extremely complicated and like you just said, I mean most traits, they are not the result of a single gene. They are the result of a combination of many genes and many processes in the body, which have a lot of other effects. So it's extremely likely that if we try to intentionally kind of re-engineer ourselves to be more intelligent, even if we succeed, there will be a lot of unintended consequences, like loss of empathy, which could be terrible.

[00:32:14] So this is why I think it's also actually dangerous to allow these kinds of technologies or powers to narrow-minded organizations like, I don't know, armies and corporations, which, you know, armies would like to have more disciplined and intelligent soldiers, even if it comes at the expense of making people less empathic, or destroying human spirituality. So we have to be extremely careful about not allowing armies and corporations and governments to acquire these kinds of abilities.

[00:32:46] And you know, if you look back in history, you see all kinds of examples of how people always had visions to re-engineer humanity, and it usually ended badly. You know, to give just one example, in many ancient kingdoms and empires, kings and emperors, they were always afraid that their ministers or generals would depose them and establish a new dynasty. The biggest headache of every Chinese emperor and every caliph was: what if my own ministers or my own generals would rebel and depose me and take the throne? So one solution that many of these autocrats found was to use biotechnology, thousands of years ago, to create a new kind of human. And this was castration. You take the general or the minister or whatever, and you cut off a certain part of the male body and problem solved. This person, maybe they can still rebel against me, but they can't establish their own dynasty.

[00:33:56] So you see in many ancient kingdoms and empires that eunuchs were used to man the bureaucratic administration, and even some of the high-ranking positions in the Army and Navy. Like before Columbus, the greatest naval expedition in history, a Chinese expedition, which reached all the way to Africa, was led by Admiral Zheng He, who was a eunuch and again, from the viewpoint of the Chinese emperor, this is great. This person is very unlikely to rebel against me and try to establish their own dynasty because they can't establish a dynasty. Of course, from the viewpoint of the individual in question, it was not such a great idea. But yeah, I mean, for centuries in a place like China, if you want a government job, they tell you, "Wonderful, you want a government job, please, but we just need your testicles and the job is yours."

[00:34:53] Jordan Harbinger: You might not make it through the interview process, though, unfortunately, with the medical technology of the day.

[00:34:58] Yuval Noah Harari: Yes.

[00:34:58] Jordan Harbinger: You know, but still better, maybe still better than starving to death on the streets of Beijing or whatever, back in ancient China.

[00:35:05] You'd said something on 60 Minutes that really freaked out our boy, Anderson Cooper, something along the lines of, "Within a century or two, the Earth will be populated by beings as different from us as we are from chimpanzees." And that's a hell of an assertion.

[00:35:20] Yuval Noah Harari: Yeah.

[00:35:20] Jordan Harbinger: What do you mean by that? What's happening with society in 200 years, potentially?

[00:35:24] Yuval Noah Harari: It's more about technology than about society. The combination of biotechnology on one side and artificial intelligence on the other side means that if we don't destroy ourselves in the next century or two, we are very, very likely to use these technologies to either change ourselves, our own bodies, and brains and minds, to such an extent that these future entities will be more different from us than we are different from Neanderthals. You know, the only difference between us and Neanderthals is just a few genetic mutations, which led to changes in brain structure and hormonal system and so forth. So we now acquire the technology, maybe not in the next 10 years, but in the next hundred years of making even bigger changes intentionally to our DNA to our bodies, to our brains. And in addition, we have the breakthrough in AI, which could lead either to combination of organic bodies with computers, which are known as cyborgs or to the creation of completely inorganic entities, completely inorganic beings that could be far more intelligent and capable than us. And you know, it's difficult even to imagine what these beings would be like because our own imagination is the product of organic biochemistry. It's the product of our own organic brain, and it's very difficult for us to even imagine what an inorganic entity, an inorganic being would look like or would be capable of doing.

[00:37:07] You know, as organic creatures for instance, we are used to the situation that you are always in one place in space and all the parts of your body must be connected together for you to be alive. So I'm now in Tel Aviv. I can't be at the same time in Chicago or in Sydney, but this is just organic beings. If you're talking about an inorganic entity or a cyborg, these limitations collapse. And an algorithm or an AI entity, it can be in many places at the same time. And a cyborg could also be spread over space. And this experiment has already been done, you can today connect a bionic hand to a brain of a human, and you control the hand with just your brain, but the hand doesn't need to be attached to your body. You can control the hand by remote control, even if it's in another room or another city or another continent. So even these very fundamental things like being in one place at one time, which we think are obvious, that all life forms obey these laws, this may not apply to the future entities, which might control the planet in a century.

[00:38:31] Jordan Harbinger: And it makes sense for humans to build this kind of thing because I'm thinking of a medical application, right? If I have brain cancer, god forbid, right? Knock on wood. And the best surgeon for that is in Israel. Maybe I can't travel, maybe I just don't even need to. I go to the hospital in New York or somewhere in LA and the guy in Israel cracks his knuckles before planning to go to watch the next Succession season 500 episode. And he just gives me the brain surgery from whatever tech deck he's using and doesn't even need to wash his hands because he is, you know, whatever. He is touching the same gloves he uses every day.

[00:39:04] Yuval Noah Harari: Yeah.

[00:39:04] Jordan Harbinger: I get life-saving surgery. And he at the end, you know, goes and gets a massage and watches some TV or whatever the equivalent is in 50 or 100 years. And it's just routine. And I just got the best surgery of my life from a human. Or if maybe we don't even need humans at that point to do that kind of thing.

[00:39:23] Yuval Noah Harari: Mm-hmm.

[00:39:24] Jordan Harbinger: But just the idea that we could get medical care or connect with somebody at that distance is really, really something incredible. People could design really dangerous things without getting anywhere near them.

[00:39:34] Yuval Noah Harari: Yeah.

[00:39:35] Jordan Harbinger: Nita Farahany, episode 810 of this show, she was concerned that AI-enabled humans essentially will widen the gap between the haves and the have-nots to a degree that humanity has never seen. And I think—

[00:39:47] Yuval Noah Harari: Yes.

[00:39:47] Jordan Harbinger: —imagine you can afford to get an AI-enabled brain implant for your kids. I can do the same, but other people can't where I grew up near in Detroit. The difference in capabilities would probably be like an office worker who has a computer with Internet versus an office worker who doesn't have lights or electricity. It could even be a greater gap than that.

[00:40:04] Yuval Noah Harari: Yeah, I think that we are getting close to a point in history when it will be more and more likely that economic differences will get translated into real biological differences, which previously in history was true to some extent. Like the rich had better food, so they grew taller, and they had other advantages, but basically, they were still the same human beings. One of the dangers we are facing now with the new technology is that if we don't make sure that everybody benefits, then we might see the greatest inequality ever emerging because of these new technologies. This is certainly a very, very big danger.

[00:40:52] Jordan Harbinger: Algorithms being able to tell our sexual orientation. This was something you mentioned, I can't remember where, I think it might have also been on 60 Minutes, but it's like at first when you're talking about this, it sounds like a fun party game and then you realize that it's going to end up with a bunch of people getting murdered by an authoritarian government.

[00:41:09] Yuval Noah Harari: Yeah.

[00:41:09] Jordan Harbinger: Take us through this because I think a lot of things with algorithms start with this is going to be so convenient and they end with, "Wow, I did not see that coming."

[00:41:17] Yuval Noah Harari: Yeah. I think about my own life. So I came out as gay when I was only 21, and I often think about my life when I was, I don't know, 14 or 15. Now, it should have been obvious to me when I was 15 that I was gay because looking back, I was far more interested in guys than in girls even back then. But I didn't realize it. I grew up in a very homophobic society. So you had a lot of kind of self-repression. And even though the signs are there, you repressed, you don't know. And this is something very, very human. There are many things we don't know about ourselves. Sometimes we don't know about ourselves, the most important things in life. But an algorithm could have told me or could have discovered without telling me that I was gay when I was 15 very easily. There are so many ways to do it. One way, which is today, technically possible, is simply by tracking eye movements that—

[00:42:14] Jordan Harbinger: Mmm.

[00:42:15] Yuval Noah Harari: —you know, I don't know, I'm walking down the beach and there is a sexy guy and a sexy girl. Where do my eyes go? And where do they linger? This is something which is, you know, usually, it's not even under your control. And today, a computer can track your eye movements and can know this even before you know it about yourself. In some scenarios, it could be used in a good way to help people understand themselves and come to terms with themselves and so forth. But there are also quite disturbing usages for this kind of technology. One thing, for instance, is corporations using it to manipulate us. So for instance, I don't know, Coca-Cola discovering when I'm 15 that I'm gay before I know it about myself.

[00:43:06] Jordan Harbinger: Mm-hmm.

[00:43:06] Yuval Noah Harari: And deciding which commercials to show me. Like, they'll show me the commercial with the sexy guy and not with the sexy girl. But there are, of course, much worse scenarios. Uganda recently legislated a law which inflicts the death penalty on homosexuality. So what would the Ugandan government or the Iranian government or the Russian government do with algorithms that are able to detect people's sexual orientation? This is a frightening scenario, which could become real quite quickly. I think more broadly, what we are looking at is the possibility of building the worst totalitarian regimes in human history.

[00:43:51] Because if you look at the 20th century at regimes like Nazi Germany or the Soviet Union, they wanted to know everything about their citizens. They wanted to follow everybody all the time, but they couldn't because the technology was not there. You know, in the Soviet Union, you have 200 million citizens, more or less. You don't have 200 million KGB agents that can follow each and every person 24 hours a day. And even if you do, you know, in the days of Stalin or Brezhnev, a KGB agent that follows you at the end of the day, writes a paper report about everything that you did and said and everybody you met and whatever, and then they send this paper report to Moscow and every day, imagine that KGB headquarters gets 200 million paper reports. Nobody's able to read it and analyze it. Now, it is becoming possible. You don't need human agents to follow people around. You have all these cameras and microphones and so forth, and you don't need human analysts to make sense of the data, you have AI. So the neo-totalitarian regimes of the 21st century, they could be much worse than the Soviet Union. They can actually follow everybody all the time, and, for instance, discover things about you that you don't know about yourself, like your sexual orientation when you're a teenager.

[00:45:19] Jordan Harbinger: This is The Jordan Harbinger Show with our guest Yuval Harari. We'll be right back.

[00:45:23] This episode is sponsored in part by Better Help. Are you stuck in a whirlwind of others' needs, work on top of taking care of the kids, elderly parents and your partner? Zero time to yourself. To put it dramatically, you're basically hosting an all-you-can-eat buffet of giving, and now you're just a squeezed-out lemon or worse, charred toast. Jen can relate. Therapy can be your secret weapon, helping you grab the reins and find equilibrium in your life. It's like having a personal coach training you to support your loved ones while ensuring you are not burned out in the process. Don't be left hanging out to dry while everybody else is taken care of. It's time to get your needs in the mix too. Therapy isn't just for those who've been through major hurdles or traumas. It provides a tuneup for the daily grind, the rollercoaster that we call life. So whether you've been through a tsunami or a drizzle, therapy definitely has your back. I know a lot of people say like, "Oh, online therapy can't be the same. It's just phone. It's not as good as in-person." Trying to get an in-person therapist is really tough, especially right now because therapy is in demand. I highly recommend Better Help. Dip your toes in those therapy waters. You zip through a quick questionnaire, you get paired with a licensed therapist tailored to your needs, and if you don't click, you can get another therapist anytime. No extra charge.

[00:46:26] Jen Harbinger: Find more balance with Better Help. Visit betterhelp.com/jordan to get 10 percent off your first month. That's better-H-E-L-P.com/jordan.

[00:46:36] Jordan Harbinger: This episode is sponsored in part by Airbnb. So we used to travel a lot for podcast interviews and conferences and we love staying in Airbnbs because we often meet interesting people and the stays are just more unique and fun. One of our favorite places to stay at in LA is with a sweet older couple whose kids have moved out. They have a granny flat in their backyard. We used to stay there all the time. We were regulars, always booking their Airbnb when we flew down for interviews. And we loved it because they'd leave a basket of snacks, sometimes a bottle of wine, even a little note for us, and they would leave us freshly baked banana bread because they knew that I liked it. And they even became listeners of this podcast, which is how they knew about the banana bread. So after our house was built, we decided to become hosts ourselves, turning one of our spare bedrooms into an Airbnb. Maybe you've stayed in an Airbnb before and thought to yourself, "Hey, this seems pretty doable. Maybe my place could be an Airbnb." It could be as simple as starting with a spare room or your whole place while you're away. You could be sitting on an Airbnb and not even know it. Perhaps you've got a fantastic vacation planned for the balmy days of summer. As you're out there soaking up the sun and making memories, your house doesn't need to sit idle. Turn it into an Airbnb. Let it be a vacation home for somebody else. And picture this, your little one isn't so little anymore. They're headed off to college this fall. The echo in their now empty bedroom might be a little too much to bear. So whether you could use a little extra money to cover some bills or something a little more fun, your home might be worth more than you think. Find out how much at airbnb.com/host.

[00:47:59] This episode is also sponsored in part by NetSuite. Do you have a business that generates millions or tens of millions in revenue? Well, smell you, but you'll want to pay attention because NetSuite by Oracle has just rolled out the best offer we've ever seen. As a business owner, I know firsthand that managing disparate systems will hinder growth and efficiency. I used to build stuff myself and felt pretty good about it. But look, if your accounting system doesn't talk to your CRM and doesn't talk to your sales, your customer relationships, and all that jazz, you just don't have a clear picture of your overall business performance. NetSuite's cloud-based platform can bring your financials, inventory, sales, customer relations, all into a single unified platform. It's like one big old dashboard system, everything all in one place. Left hand talking to the right hand, so you finally know what everything is doing. Helps your business become more agile, responsive, competitive. This is why they're number one. And for the first time in NetSuite's 25 years as the number one cloud financial system, you can defer payments of a full NetSuite implementation for six months. That's no payment, no interest for six months. Take advantage of the special financing offer today. 33,000 companies have already upgraded to NetSuite, getting visibility and control over their financials, HR, e-commerce, and more. I used to use this in one of my previous companies. It was amazing. Highly recommend it.

[00:49:09] Jen Harbinger: If you've been sizing NetSuite up to make the switch, then you know this deal is unprecedented, no interest and no payments. Take advantage of this special financing offer at netsuite.com/jordan, netsuite.com/jordan to get the visibility and control you need to weather any storm, netsuite.com/jordan.

[00:49:28] Jordan Harbinger: If you like this episode of the show, I invite you to do what other smart and considerate listeners do, which is take a moment and support our amazing sponsors. All the deals, discount codes, and ways to support the show are at jordanharbinger.com/deals. You can also search for any sponsor using the AI chatbot on the website at jordanharbinger.com/ai. Thanks so much for supporting those who support the show.

[00:49:49] Now for the rest of my conversation with Yuval Noah Harari.

[00:49:54] It is terrifying, I think of North Korea and—

[00:49:57] Yuval Noah Harari: Yeah.

[00:49:57] Jordan Harbinger: —imagine if every time you have to say a poem about Kim Il-sung or whatever, or every time you look at the photos that are up everywhere, the giant paintings, there's some people who are going to go, "Wow, I'm so reverent for our Dear Leader." And other people are going to be like, "Oh, this fricking crap again." And if they can find out the people that are thinking, "Oh, this fricking crap again," they can throw them into reeducation before that person even knows that they're sick of revering Dear Leader.

[00:50:22] Yuval Noah Harari: Yeah.

[00:50:22] Jordan Harbinger: Or just get rid of them, which is even more terrifying. But either way, you end up with totalitarian control. And you're right, it is really terrifying. Especially because often it comes in the form of a game you play with your friends at a party on Facebook that says, "All right, everybody, look at the camera and it's going to flash some pictures, and then we're going to find out if you're gay." And everyone's like, "Yay, that's going to be hilarious." And then, it's not at all, right? It's terrifying.

[00:50:45] Yuval Noah Harari: Yeah.

[00:50:45] Jordan Harbinger: Because you found out you were gay from Facebook, even though you didn't know already, which would be really not a place I'd want to find that out at someone's birthday party.

[00:50:53] Yuval Noah Harari: Exactly. It's a very important moment in life. It's an important process, usually not a single moment. And you need to do it in the right way.

[00:51:02] Jordan Harbinger: Reading some of your books, it looks like most of human history was essentially one war or conflict after another, hot or cold. And that peace was kind of just the absence of war.

[00:51:13] Yuval Noah Harari: Mm-hmm.

[00:51:14] Jordan Harbinger: And it really seems like Putin's invasion of Ukraine has shattered that illusion, at least for my generation, where it's like, "Oh, we're fine. The Cold War's over. Look at our lives. They're going to be so sunny and bright." It just reminded us that the barbarians really are just outside the castle walls. The jungle is still right outside. Aside from increased defense spending and maybe lower spending on everything else as a result, what do you think the macro effect of this is going to be?

[00:51:39] Yuval Noah Harari: The macro effect is that we have all these existential threats we need to deal with as humanity. It's the technological threat we've just been discussing at length, the rise of AI and biotechnology and all the implications, and we have the ecological threat. And instead of uniting to deal with these threats together, we are just creating another existential danger of the Third World War that seemed like an almost impossible scenario, say 10 years ago, but now is increasingly looking like a very likely eventuality, even if we don't have a hot world war, a Third World War, if we don't unite to deal with AI on the one hand and the ecological crisis on the other, we can't solve these problems. Individual nations by themselves, they cannot stop climate change. We need some global agreement on it. Even more so with regard to regulating AI, countries that don't trust each other will not be able to regulate this disruptive technology, so we'll get into an arms race in AI and arms race in biotechnology and an arms race almost guarantees the worst outcome.

[00:52:55] Every side, I don't know, if you think about creating killer robots, weapons that are autonomous and can decide by themselves who to shoot and who to kill. This is obviously a very, very dangerous technology. Every country would say, we don't want to do it. It's obvious that this is a very dangerous step, but we cannot allow ourselves to remain behind. We can't trust the Russians, the Israelis, the Chinese, whoever, not to do it first. So we must do it before them. And the other side would say the same thing, and then you'll have an arms race. So it's going to develop first the most effective and lethal killer robots. And then everybody knows it's a bad idea and still everybody does it. That's the logic of an arms race. And the only way to stop it is through cooperation. But when you have Putin invading Ukraine, and when it again becomes a norm in the international system that one country just tries to destroy its neighbors because it can, then trust collapses. And there is no way that we can stop the arms race or reach some agreement on regulating dangerous technologies.

[00:54:08] Jordan Harbinger: Is the war in Ukraine essentially kind of a war for the norm that you can't just invade your neighbor? It seems like—

[00:54:15] Yuval Noah Harari: Yes.

[00:54:15] Jordan Harbinger: I don't want to put words in your mouth, but it's kind of like if we defeat Putin in Ukraine, then it says, "Hey, trust is more important here. You can't do that. Everybody who's thinking about doing that, don't do it because we will unite and face you down. It's going to be the end of your regime or you're just going to be in the meat grinder."

[00:54:33] Yuval Noah Harari: Yeah. I mean, you know, for thousands of years, this was the human norm. Humans lived in the jungle for thousands of years. Whether you live in ancient Greece or ancient China, or medieval Europe, or the 19th century, you know that at any moment, the neighboring tribe, the neighboring kingdom, the neighboring country might invade and conquer. Everybody knows it. This is kind of the basics of history or the basics of international relations. And over the last few decades, it changed. It became unacceptable for one country to invade and annihilate a neighbor just because it can. We still had lots of war, but of a different kind. You had a lot of civil wars, you had a lot of internal conflicts. But since 1945, there has not been a single case of an internationally recognized country which is just wiped off the map because a stronger neighbor invaded and conquered it. And this was reflected, for instance, in a decline in military budgets because all countries felt much safer. And now, this new norm is broken, and if Putin gets away with it, we'll return to the jungle, basically. And countries all over the world will know that this is again possible.

[00:55:55] You know, it's like in a school when some bully beats up a kid in the yard and everybody is kind of forming a circle to see what happens. If the bully is stopped and punished, so, you know, "Oh, you can't do these things, you can't behave like that." But if the bully gets away with it, then every kid in the school knows that, this is the new norm of the school. It can also happen to me. So, I need to take care of myself and taking care of myself in terms of international relations, for instance, means increasing your military budget or entering into military alliances, which of course, impacts your neighbors because if you increase your military budget, they now feel less safe. So they increase their budget and you have this arms race spiral again, which ultimately harms everybody.

[00:56:45] Jordan Harbinger: Do you think we're likely to see an increase in the proliferation of nuclear weapons? I mean—

[00:56:49] Yuval Noah Harari: Yeah.

[00:56:49] Jordan Harbinger: A lot of people are saying, well, Ukraine gave up their nukes in exchange for security and that didn't really work.

[00:56:55] Yuval Noah Harari: Absolutely. Again, if you live in the jungle, you want to have the biggest teeth. Certainly when you think about the history of Ukraine, that after it gained independence, it voluntarily gave up its nuclear weapons in exchange for these promises of protection, both from Russia and from the United States and the Western powers. And then it was invaded by Russia and the Western powers said, "Okay, we are not going. We are helping in many ways, but we are not sending our armies to help you." So all other countries in the world, again, are watching this. And the conclusion is, if we have nuclear weapons, don't give them up. No matter what guarantees you get, they won't be worth much in times of trouble. And the countries that don't have nuclear weapons, at least some of them are likely to want to get some.

[00:57:46] If you think about even, I don't know, like the post-war arrangements after 1945, so both Germany and Japan, even though they had the ability to acquire nuclear weapons, they certainly have the technological and the economic resources necessary to produce nukes. They gave up this option, trusting in the United States, to a large extent, to provide them with a nuclear umbrella. Now, what happens, for instance, if in 2024 or 2028, a new US president is elected who opts for a very isolationist foreign policy and basically Germany or Japan or other countries can no longer rely on the Americans to provide them with security? So even though at present it seems almost unthinkable that Germany or Japan would go nuclear, depending on, you know, the outcome of the next US elections, this can be a real development.

[00:58:48] Jordan Harbinger: That is scary regardless of what you think about who's in charge of those nukes, right? Just proliferation in general always should give everybody some pause.

[00:58:57] Yuval Noah Harari: Absolutely.

[00:58:58] Jordan Harbinger: I've heard you say that if Putin waited 10 more years, maybe he might have gotten away with invading Ukraine.

[00:59:03] Yuval Noah Harari: The West would've collapsed, yeah.

[00:59:04] Jordan Harbinger: There you go, yeah, I was going to say, you didn't say the West would've collapsed, but there we go.

[00:59:07] Yuval Noah Harari: If you look at the culture war within the West, within the United States, within European countries, the impression is, if Putin had just left the West alone to, you know, go down with this culture war, he could have then done whatever he wanted. And I think that the biggest threat to the West, and to a large extent, even to the peace of the world, is internal. Because the Western powers are still by far the most powerful on the planet. You look both militarily and also culturally and economically, if Western democracies stand together, they are much more powerful than Russia. They're even significantly more powerful than China. You know, the Russian GDP is about the same as Italian GDP. In economic terms, if you take Belgium and the Netherlands together, that's Russia.

[00:59:59] Jordan Harbinger: Wow.

[00:59:59] Yuval Noah Harari: The West's biggest problem is internal, is this culture war, which is tearing the West apart. And you know, this is extremely unfortunate because I think basically almost all sides in the culture war, they agree on the same basic values, on the same basic worldview. Looking from outside, it's really difficult to understand what the big fight is all about. You know, looking from outside, the difference, for instance, between Republicans and Democrats in the United States doesn't look very significant. They all agree on basic values like freedom, like democracy, like equality. If we talked earlier about humanism, you know, for most of history, so people believed in the divine right of monarchs and the big political battle in the 19th century, for instance, was between people who believed in the divine right of kings to rule and the will of the people, democratic elections. This is no longer the case. Democrats and Republicans are in exactly the same camp. They both believe in democracy, in the will of the people. It's the same with the economy. They both believe in the free market. Yes, they have some, you know, from a historical perspective, relatively small differences in the level of taxation they want. But it's not like in the early 20th century where you have a communist camp that wants to completely abolish private property. Nobody's talking in these extremist terms. So economically, also, the difference between Democrats and Republicans is not so big. Even if you look at things like, I don't know, gay marriage, so Republicans today hold much more liberal views than Democrats held 50 years ago.

[01:01:45] Jordan Harbinger: Or 20 years ago, I think, wasn't even Hillary Clinton was like, "Well, I don't know," and that was like five minutes ago in a historical timeline.

[01:01:52] Yuval Noah Harari: So it seems that people are taking certain flashpoint issues which are important in themselves, of course, like abortion, like gun control, like transgender rights, and in a way, you know, kind of weaponizing them and taking them to extremes in order to create this political polarization, which looked at from a broader perspective, there just seems such a mismatch between the issues people are fighting over and what is at stake. Because what is at stake is really now the collapse of American democracy. What is at stake is the survival of the Western bloc, maybe even the survival of humankind, because if we don't have unity in the world, then we won't be able to solve climate change and the threat of AI and so forth. And to risk all that because of arguments over who can go to which toilet, this seems just incredible. You know, if we approach these hot topics, not with an intention of weaponizing them, but with goodwill, on all of them people can reach compromises. Nobody would get everything they want, but they are all issues that people can actually compromise on because they are not, you know, the most fundamental issues for the survival of the community or of the country.

[01:03:18] Jordan Harbinger: I love hearing that because one of my primary questions here was, I don't understand why, instead of seeing the tension between left and right as something necessary to democracy, keeping one another in check, having different ideas coexisting in one place.

[01:03:30] Yuval Noah Harari: Yeah.

[01:03:30] Jordan Harbinger: That used to be healthy. And now we're seeing people with different views as the enemy. And I wondered if that was normal through history because the differences in ideology don't seem greater now than they did in the past. They just maybe seem that way.

[01:03:43] Yuval Noah Harari: They seem smaller. If you think about the '60s, so the big arguments in the '60s about civil rights, about the sexual revolution, about the Cold War, the Vietnam War, they're much bigger in many ways, or much more consequential than what people are arguing about right now. And you had a lot more violence also in the '60s, you know, with assassinations of political leaders, with riots in the streets. And nevertheless, people, for instance, did accept the results of elections. The type of breakdown of the democratic institutions and traditions that we see today is, in this sense, even more surprising. What is true is that when people start seeing their political rivals as enemies, democracy cannot be maintained.

[01:04:31] One of the things about democracy from a historical perspective is that it's not always possible to have democracy. Democracy needs some quite rare preconditions in order to exist. Dictatorships you can have at any moment. You don't need preconditions. You can always have dictatorships, but democracy needs some preconditions. One of them is that people don't see their political rivals as enemies. You can think that they're stupid. You can think that they're misguided. You can think that they support the wrong policies, but they are not your enemies. They are not out there to destroy you and your way of life. If you start thinking that they are your enemies, that they want to harm you, then you will do anything to win the elections. And if you lose, you have no reason to accept the result because this is a war. And we need to find a way, both in the USA, also in my country of Israel, also in other democracies, to kind of go back to a situation where, again, you can think whatever you want about your political rivals, but don't see them as enemies. Because if that happens, you can have a civil war, you can have a dictatorship, or you can split the country, but you can't have a democracy.

[01:05:47] Jordan Harbinger: That's terrifying if dictatorship or authoritarianism is almost the default as long as we're not swimming upstream towards democracy.

[01:05:55] Yuval Noah Harari: Yeah.

[01:05:55] Jordan Harbinger: We're sliding back into one of those. That's a terrifying historical perspective because what that says is the second we sort of take our foot off the gas pedal, back we slide.

[01:06:05] Yuval Noah Harari: Yeah. Because again, the dictatorship is very easy and democracy is very hard. Because democracy is built on trust between people and trust between people and institutions. You need to trust your neighbors. You need to trust other members of the community. You need to trust a lot of institutions in order for democracy to function. Dictatorship, on the other hand, it functions on the basis of distrust. For a dictator, it's a good thing that people don't trust each other, that people don't trust what they hear on the news, that people don't trust the courts, because if people lack trust, they cannot unite against the dictator. You know, the most ancient trick is divide and rule. When people don't trust each other, that's the ideal situation for a dictator. When people trust each other, they can oppose the dictator, and this is the basis for a democratic system. But of course, trust is much, much harder to achieve than distrust. It takes years to build trust between people, and you can destroy this trust within a few days or even a few hours.

[01:07:15] Jordan Harbinger: That's a scary thought. But do you have hope for the future in terms of trust in terms of the West or the United States or rebuilding this in the next three minutes that we have here?

[01:07:26] Yuval Noah Harari: Yes. I have hope because the good thing about democracy is that it is much better than dictatorships at learning from its own mistakes and at trying something different. You know, in a dictatorship when a dictator makes a mistake or does something wrong, he is not going to admit it. There is no independent media that can expose it. There are no elections in which you can replace him. Usually the dictator would just use his power to blame the problem on somebody else, on some enemy, some traitor, and demand even more power for himself. So what you see over time is that dictatorships tend to amplify their own mistakes, to repeat them and amplify them.

[01:08:08] In a democracy, on the other hand, there are self-correcting mechanisms that allow for identifying and rectifying the mistake. You have independent media, even if the president does something terrible, the media can expose it, the court can oppose it, and people can replace them in the next elections. So over time, even though in the short term, it seems that dictatorships are much more efficient because, you know, they're ruthless, they can work fast, you just need one person to make a decision, and the country changes its direction. In the long term, democracies are much better at learning from mistakes and changing course of action, which is why over time they perform better. Whether it'll happen again in this round, I don't know, but this kind of doom scenario, the end of democracy, has kind of accompanied the history of democracy for the last 200 years. You have these repeated crises when you think that this is it, democracy is over, like what happened in the 1960s. In the 1960s, you see the Western democracies in a very deep internal crisis, whereas the totalitarian regimes in places like the Soviet Union, they seemed completely stable, that they would last forever. But in the end, it was the USSR that collapsed because it couldn't correct its own mistakes and adapt to new situations. Whereas Western democracies like the USA, they changed themselves. It was very difficult, but they succeeded in doing it. And this is how they eventually won the Cold War.

[01:09:50] Jordan Harbinger: That's a very hopeful scenario. Let's hope on this round of the dice roll, we come out on top. And I know we're out of time. It's so great to finally make this happen. I would love to do it again depending on how painful this conversation was for you, of course.

[01:10:03] Yuval Noah Harari: No, it was very, very pleasant. Thank you.

[01:10:05] Jordan Harbinger: And as our people are fond of saying next year or next time in Jerusalem, or maybe just Tel Aviv. But thank you very much and I hope you enjoy the next episode of Succession.

[01:10:14] Yuval Noah Harari: Thank you.

[01:10:16] Jordan Harbinger: You are about to hear a preview of The Jordan Harbinger Show about how you can be affected by ransomware and cyber attacks on the rise now all over the world.